A Dutch study suggests the best computer-aided detection (CAD) system can detect most pulmonary nodules at a low false-positive rate and find additional lesions missed by human readers, but awareness of the varying reliability of different CAD algorithms is crucial to properly evaluate and benchmark system performance.

The pioneering research from the Netherlands benchmarked CAD performance on the full Lung Image Database Consortium/Image Database Resource Initiative (LIDC/IDRI) database. Earlier studies used only subsets of the LIDC data, which made it difficult to compare CAD systems; this research compared the performance of several state-of-the-art CAD systems, including a new commercial prototype, on the whole database. At the time, the study was among the first to be conducted across the whole of the largest publicly available reference database (European Radiology, 6 October 2015).

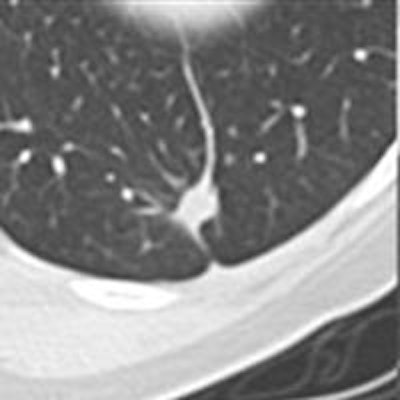

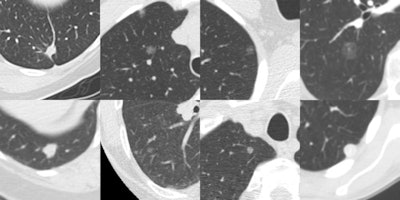

Examples of nodules that were missed by LIDC annotators. These eight randomly chosen examples of solid nodule annotations were marked as nodules 3 mm or larger by all four new readers in the observer experiment but were not annotated by any of the original LIDC readers. Each image shows a transverse field of view of 60 x 60 mm centered on the nodule. Besides being hard to detect, these extra nodules may have been missed because the LIDC readers inspected only transverse sections. All figures courtesy of Colin Jacobs.

The positive results of the National Lung Screening Trial (NLST) and the subsequent developments toward implementation of lung cancer screening in the U.S. have renewed interest in CAD for pulmonary nodules, according to the authors.

"If lung cancer screening will be implemented on a large scale, the burden on radiologists will be substantial and CAD could play an important role in reducing reading time and thereby improving cost-effectiveness. To be able to move CAD to a performance level which is clinically acceptable, it is essential to have good reference databases and reliable evaluation paradigms," noted lead author Colin Jacobs, of the Diagnostic Image Analysis Group at Radboud University Medical Center in Nijmegen, in an email. "However, it is important to realize for radiologists that the quality of CAD algorithms may vary quite a bit."

This study used the LIDC/IDRI dataset, which consists of 1,018 helical thoracic CT scans collected retrospectively from seven academic centers and acquired on 16 scanner models from four CT scanner manufacturers. After excluding nine cases with inconsistent slice spacing or missing slices, and 121 scans with a section thickness of 3 mm or greater, 888 CT cases were available for evaluation.
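The two exclusion rules can be sketched as a simple filter. This is an illustration only: the `Scan` record and its field names are hypothetical, not the LIDC/IDRI metadata schema, and the count helper assumes the two exclusion groups do not overlap, which is what the reported numbers imply (1,018 - 9 - 121 = 888).

```python
from dataclasses import dataclass


@dataclass
class Scan:
    # Hypothetical per-scan metadata; not the actual LIDC/IDRI field names.
    scan_id: str
    slice_spacing_consistent: bool
    has_missing_slices: bool
    section_thickness_mm: float


def is_eligible(scan: Scan) -> bool:
    """Apply the two exclusion rules reported for the study."""
    if not scan.slice_spacing_consistent or scan.has_missing_slices:
        return False  # excluded: inconsistent slice spacing or missing slices
    if scan.section_thickness_mm >= 3.0:
        return False  # excluded: section thickness of 3 mm or greater
    return True


def remaining_cases(total: int, bad_geometry: int, too_thick: int) -> int:
    """Cases left after removing two non-overlapping exclusion groups."""
    return total - bad_geometry - too_thick


print(remaining_cases(1018, 9, 121))                    # 888
print(is_eligible(Scan("example", True, False, 1.25)))  # True
```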

Besides assessing the performance of three state-of-the-art nodule CAD systems, the researchers conducted an observer study to investigate whether CAD can find additional lesions missed during the extensive LIDC annotation process. In that process, four radiologists independently reviewed all cases in a first, blinded phase; in a second, unblinded phase, each radiologist independently reviewed their own marks along with the anonymized marks of their colleagues. Findings were classified as nodules 3 mm or larger, nodules smaller than 3 mm, or non-nodules (abnormalities in the scan not considered nodules).
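To make the agreement-based reference standard concrete, here is a minimal sketch assuming each reader's marks have already been grouped into findings, with one category label per reader who marked the finding (readers who did not mark it contribute no label). The category strings and the `reference_set` helper are hypothetical names chosen for illustration; the rule itself, keeping only findings that all four readers labeled as nodules 3 mm or larger, is the one the study used for its 777-nodule reference set.

```python
from collections import Counter

# Hypothetical labels for the three LIDC annotation categories.
NODULE_GE_3MM = "nodule>=3mm"
NODULE_LT_3MM = "nodule<3mm"
NON_NODULE = "non-nodule"


def agreement(labels: list[str]) -> int:
    """Number of readers who labeled a finding as a nodule >= 3 mm."""
    return Counter(labels)[NODULE_GE_3MM]


def reference_set(findings: dict[str, list[str]], readers: int = 4) -> list[str]:
    """IDs of findings that every reader labeled as a nodule >= 3 mm."""
    return [fid for fid, labels in findings.items() if agreement(labels) == readers]


findings = {
    "f1": [NODULE_GE_3MM] * 4,                            # 4/4 agreement
    "f2": [NODULE_GE_3MM, NODULE_GE_3MM, NODULE_LT_3MM],  # three readers, mixed labels
    "f3": [NON_NODULE, NODULE_GE_3MM],                    # two readers
}
print(reference_set(findings))  # ['f1']
```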

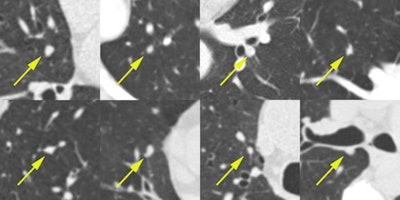

Eight randomly chosen examples of false negatives from Herakles. Each image shows a transverse field of view of 60 x 60 mm centered on the nodule. Many of the missed nodules are subsolid.

Three CAD systems were evaluated: the commercial CAD system Visia (MeVis Medical Solutions), the commercial prototype CAD system Herakles (MeVis Medical Solutions), and the academic nodule CAD system ISICAD (Utrecht Medical Center). Each system's performance was analyzed on the set of 777 nodules that all four radiologists annotated as nodules 3 mm or larger. The study used free-response operating characteristic (FROC) analysis, in which detection sensitivity is plotted against the average number of false-positive detections per scan. CAD marks at locations annotated by three or fewer radiologists as a nodule 3 mm or larger, a nodule smaller than 3 mm, or a non-nodule were counted as false positives.
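An FROC curve can be traced by sweeping a threshold over the CAD scores. The sketch below assumes each mark has already been matched to a reference nodule (or to none, making it a false positive); the `froc_points` name and the `(score, nodule_id)` layout are illustrative choices, not the study's evaluation code. Each nodule counts once toward sensitivity even if several marks hit it.

```python
from typing import Optional

Mark = tuple[float, Optional[str]]  # (cad_score, id of hit nodule, or None for a false positive)


def froc_points(marks: list[Mark], n_nodules: int, n_scans: int) -> list[tuple[float, float]]:
    """Return (average false positives per scan, sensitivity) at each score threshold."""
    points = []
    for threshold in sorted({score for score, _ in marks}, reverse=True):
        kept = [nid for score, nid in marks if score >= threshold]
        detected = {nid for nid in kept if nid is not None}  # count each nodule once
        false_pos = sum(1 for nid in kept if nid is None)
        points.append((false_pos / n_scans, len(detected) / n_nodules))
    return points


marks = [(0.9, "n1"), (0.8, None), (0.7, "n2"), (0.6, "n1"), (0.4, None)]
for fps, sens in froc_points(marks, n_nodules=3, n_scans=2):
    print(f"{fps:.2f} FPs/scan -> sensitivity {sens:.2f}")
```

If every mark carries the same score, as is effectively the case when no CAD scores are available (the situation reported for Visia), the sweep has only one threshold and yields a single operating point instead of a curve.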

Comparative results for CAD systems

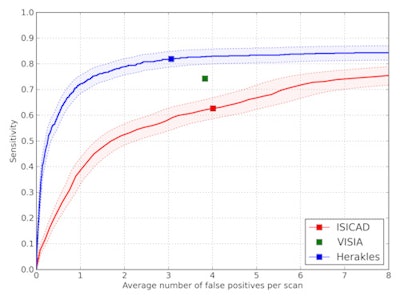

The FROC curves showed that Herakles performed best, and the differences between the systems were significant. At its system operating point, Herakles reached a sensitivity of 82% at an average of 3.1 false positives per scan for nodules on which all four LIDC readers agreed. The academic system ISICAD reached a sensitivity of around 62% at four false positives per scan, while Visia had a sensitivity of around 73% to 74% at 3.8 false positives per scan. For Visia, however, no CAD scores were available for the CAD marks, so only a single operating point, rather than a full FROC curve, could be generated.

These results are summarized in the table below.

| CAD system | Sensitivity for nodules ≥ 3 mm | False positives per scan |
| --- | --- | --- |
| Herakles | 82% | 3.1 |
| Visia | 73% to 74% | 3.8 |
| ISICAD | 62% | 4 |
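To put the operating points in absolute terms, the rates can be multiplied back by the size of the evaluation set (777 reference nodules, 888 scans). This is a rough back-of-the-envelope sketch: the published percentages are rounded, so the counts are approximate, and for Visia it assumes the lower end of the reported 73% to 74% range.

```python
N_NODULES = 777  # reference nodules with 4/4 reader agreement
N_SCANS = 888    # scans in the evaluated set

operating_points = {
    "Herakles": (0.82, 3.1),
    "Visia": (0.73, 3.8),  # lower end of the reported 73% to 74% range
    "ISICAD": (0.62, 4.0),
}


def approx_counts(sensitivity: float, fps_per_scan: float,
                  n_nodules: int = N_NODULES, n_scans: int = N_SCANS) -> tuple[int, int]:
    """Approximate detected nodules and total false positives from rounded rates."""
    return round(sensitivity * n_nodules), round(fps_per_scan * n_scans)


for name, (sens, fp_rate) in operating_points.items():
    detected, false_pos = approx_counts(sens, fp_rate)
    print(f"{name}: ~{detected} of {N_NODULES} nodules, ~{false_pos} false positives")
```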

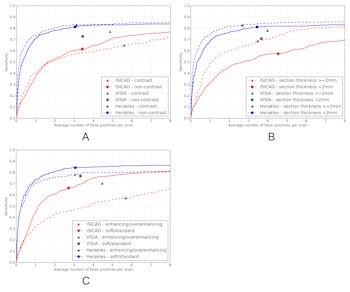

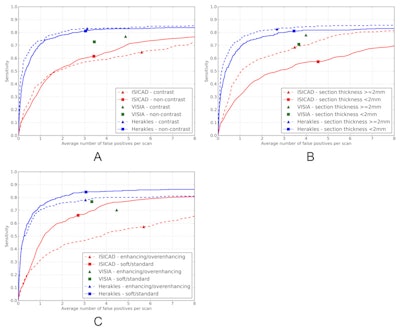

Performance of the three systems was also measured on three pairs of data subsets, defined by contrast (noncontrast versus contrast-enhanced scans), section thickness (less than 2 mm versus 2 mm or greater), and reconstruction kernel (soft/standard versus enhancing/overenhancing kernels).
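Each of the three splits partitions the same 888 scans into two groups, which is why the subset sizes reported for the figures (242 + 646, 445 + 443, 502 + 386) each sum to 888. The sketch below is illustrative only: the `ScanInfo` fields and kernel labels are hypothetical, and real scans would first need their vendor-specific kernel names mapped onto these categories.

```python
from dataclasses import dataclass


@dataclass
class ScanInfo:
    # Hypothetical attributes; kernel is an already-mapped category label.
    contrast: bool
    section_thickness_mm: float
    kernel: str  # "soft", "standard", "enhancing", or "overenhancing"


SOFT_KERNELS = {"soft", "standard"}


def subsets(scans: list[ScanInfo]) -> dict[str, list[ScanInfo]]:
    """Split scans into the three pairs of subsets used in the analysis."""
    return {
        "contrast": [s for s in scans if s.contrast],
        "noncontrast": [s for s in scans if not s.contrast],
        "thin (<2 mm)": [s for s in scans if s.section_thickness_mm < 2.0],
        "thick (>=2 mm)": [s for s in scans if s.section_thickness_mm >= 2.0],
        "soft/standard": [s for s in scans if s.kernel in SOFT_KERNELS],
        "enhancing/overenhancing": [s for s in scans if s.kernel not in SOFT_KERNELS],
    }


scans = [
    ScanInfo(True, 1.0, "soft"),
    ScanInfo(False, 2.5, "enhancing"),
    ScanInfo(False, 1.25, "standard"),
]
for name, group in subsets(scans).items():
    print(name, len(group))
```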

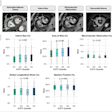

FROC curves for all three CAD systems on the full database of 888 CT scans containing 777 nodules that all four radiologists classified as nodules 3 mm or larger. The points on the curves indicate the system operating points of the three CAD systems. For Visia, only a single operating point, not a continuous FROC curve, can be provided because the CAD scores of its marks are not available. Shaded areas around the curves indicate 95% confidence intervals.

The performance of ISICAD and Visia varied across data sources. ISICAD showed the largest performance difference between soft/standard and enhancing/overenhancing reconstruction kernels. Herakles showed the most stable and robust performance across all data sources and consistently outperformed the other two CAD systems.

FROC curves for all three CAD systems on (a) contrast scans (n = 242) versus noncontrast scans (n = 646), (b) scans with a section thickness < 2 mm (n = 445) versus scans with a section thickness of 2 mm or greater (n = 443), and (c) scans with a soft or standard reconstruction kernel (n = 502) versus scans with an enhancing or overenhancing reconstruction kernel (n = 386). The reference set consists of nodules that all four radiologists classified as nodules 3 mm or larger. The points on the curves indicate the system operating points of the three systems. For Visia, only a single operating point, not a continuous FROC curve, can be provided because the CAD scores of its marks are not available.

The second part of the study revealed that CAD detected 45 new nodules missed by the human LIDC readers, showing that some CAD marks labeled as false positives were in fact true nodules.

"If a CAD mark is located at a position where the human readers annotated a nodule, this CAD mark is considered a true positive. If there is no human annotation at the position of the CAD mark, this CAD mark is considered a false positive," Jacobs noted. "However, if the human readers missed the nodule at this location, this CAD mark is wrongly classified as false positive. To investigate this effect, we showed all false positive CAD marks to four new human readers to see if we could find additional nodules. The result of this process is that we found 45 extra nodules."
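The position-based rule Jacobs describes can be sketched as a distance check between a CAD mark and the annotated nodules. The criterion used here, a hit when the mark lies within one radius of a nodule's center, is an assumption for illustration; the article does not state the study's exact matching rule, and `classify_mark` and the nodule tuple layout are hypothetical.

```python
import math

# Hypothetical nodule record: (nodule_id, (x, y, z) center in mm, diameter in mm)
Nodule = tuple[str, tuple[float, float, float], float]


def classify_mark(mark_xyz: tuple[float, float, float], nodules: list[Nodule]):
    """Return the ID of the annotated nodule a CAD mark hits, or None.

    Assumed hit criterion: the mark lies within one radius of the nodule center.
    A returned ID means a true positive; None means a false positive.
    """
    for nid, center, diameter in nodules:
        if math.dist(mark_xyz, center) <= diameter / 2:
            return nid
    return None


nodules = [("n1", (10.0, 20.0, 30.0), 6.0)]
print(classify_mark((11.0, 20.0, 30.0), nodules))  # n1
print(classify_mark((20.0, 20.0, 30.0), nodules))  # None
```

As the quote points out, a None result only means no reader annotated that location; if the readers missed a real nodule there, the mark is wrongly scored as a false positive.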

For this analysis, the researchers used the 1,108 false-positive CAD marks from Herakles that had no corresponding mark from any of the LIDC readers. After the research team removed marks that were obviously not nodules, 269 CAD marks remained for review by four new radiologists. Of these, 45 were considered nodules 3 mm or larger by all four readers. With these 45 nodules added to the reference standard, Herakles would reach an updated sensitivity of 83% at three false-positive detections per scan at its system operating point.
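The updated figures follow from simple re-scoring: each confirmed nodule moves one mark from the false-positive count to the true-positive count, and the reference set grows by the same number. The sketch below assumes all 45 marks lie at or above Herakles's operating threshold and reconstructs the starting counts from the rounded rates in the table, so it only approximately reproduces the reported 83% and three false positives per scan; `updated_operating_point` is a hypothetical helper name.

```python
def updated_operating_point(tp: int, fp: int, n_nodules: int, n_scans: int,
                            promoted: int) -> tuple[float, float]:
    """Re-score after `promoted` former false positives become reference nodules."""
    new_sensitivity = (tp + promoted) / (n_nodules + promoted)
    new_fp_rate = (fp - promoted) / n_scans
    return new_sensitivity, new_fp_rate


tp = round(0.82 * 777)  # ~637 reference nodules detected at the operating point
fp = round(3.1 * 888)   # ~2,753 false positives across 888 scans
sens, fp_rate = updated_operating_point(tp, fp, n_nodules=777, n_scans=888, promoted=45)
print(f"{sens:.0%} sensitivity, {fp_rate:.1f} FPs per scan")  # 83% sensitivity, 3.0 FPs per scan
```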

The authors offer several reasons why these nodules may have been missed the first time. According to the study, the original LIDC readers inspected only transverse sections. Furthermore, most of the missed nodules shared characteristics such as subtle conspicuity, small size (less than 6 mm), and attachment to the pleura or vasculature.

The authors concluded that, given the growing interest in and need for CAD in the context of screening, new CAD algorithms would most likely be presented in the near future. They also called for a large database of CT images with follow-up CT and histopathological correlation, which would help remove subjectivity from the reference standard and verify whether CAD detects the clinically relevant nodules.

"Based on this study, we cannot make any claims about whether CAD performs better than human readers. The individual performance of the four LIDC readers cannot be derived from the annotation data, because this data only provides the final annotation results after the unblinded second phase of the annotation process," Jacobs stated.

However, he pointed to the growing potential and sensitivity of CAD, as well as its system-dependent performance rates: "It was very interesting to see that CAD still found additional nodules that were not picked up by all four experienced radiologists involved in the LIDC reading process and that CAD performance was robust for the commercial prototype, but for the other two CAD systems, the performance varied for different data sources."

The authors acknowledge the National Cancer Institute and the Foundation for the National Institutes of Health for their critical role in creating the free, publicly available LIDC/IDRI database used in this study. They also aim to disseminate the results of their own research freely on a public website, which will include a framework allowing CAD researchers to upload their results and produce the same performance curves found in the study.

"I hope CAD researchers will utilize our website to assess the performance of their algorithm on the LIDC/IDRI database so that we can gain a good insight into the performance of available CAD algorithms," Jacobs noted. "The FROC curves presented in our paper give a good overview of the performance of a CAD algorithm on different input data. Furthermore, CAD researchers may download the results of our observer study to be used in their research."