ChatGPT-4 appears useful for extracting details from reports on thrombectomy procedures in stroke patients, a time-consuming and error-prone task currently performed by human readers, according to a group in Germany.

Such “data mining” by ChatGPT could provide an alternative to manual methods of building stroke registries, whose data are key to improving the quality of stroke care, noted lead author Dr. Nils Lehnen, of University Hospital Bonn, and colleagues.

“Procedural details of mechanical thrombectomy in patients with ischemic stroke are predictors of clinical outcome and are collected for prospective studies or national stroke registries,” the group wrote. The study was published on 16 April in Radiology.

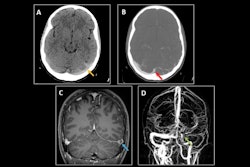

Mechanical thrombectomy has become the standard of care for patients with acute ischemic stroke due to large vessel occlusion. Medical staff extract standard details from the procedure reports and enter them into national or local stroke registries, which are used, for instance, to develop benchmarks for best practices.

OpenAI’s large language models (LLMs) ChatGPT-3.5 and ChatGPT-4 have shown promise in prior studies for extracting data from CT and MRI neuroradiology reports but had not yet been tested on reports of neurointerventional procedures, the authors wrote.

To that end, Lehnen and colleagues collected 100 reports from 100 patients who underwent thrombectomy at their hospital in Bonn. They used 20 of the reports to fine-tune the LLMs to extract the data into comma-separated values (CSV) tables. Procedural details included, for instance, door-to-groin puncture times, door-to-reperfusion times, and the number of thrombectomy maneuvers.

Next, they compared the performance of ChatGPT-3.5 and ChatGPT-4 with that of an expert interventional neuroradiologist on all 100 reports, as well as on 30 external reports from 30 patients who underwent mechanical thrombectomy at Brigham and Women’s Hospital in Boston.

Only LLM data entries that exactly matched the expert’s readings were counted as correct, the authors noted. Any deviation from the options given in the prompt, including synonyms, punctuation marks, or any additional symbols entered by the LLM, was counted as incorrect.
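The exact-match rule can be illustrated with a minimal Python sketch. The function name `score_entries`, the field names, and the example values are hypothetical and do not come from the study; the sketch only assumes that the LLM's extracted fields and the expert's readings are available as strings keyed by field name.

```python
def score_entries(llm_entries: dict[str, str], expert_entries: dict[str, str]) -> dict[str, bool]:
    """Mark a field correct only if the LLM string equals the expert string exactly.

    Hypothetical helper: synonyms, extra punctuation, or missing fields all
    count as incorrect, mirroring the strict rule described in the article.
    """
    return {
        field: llm_entries.get(field) == expected
        for field, expected in expert_entries.items()
    }


# Illustrative values only (not study data).
expert = {"door_to_groin_min": "45", "door_to_reperfusion_min": "80", "maneuvers": "2"}
llm = {"door_to_groin_min": "45", "door_to_reperfusion_min": "1 h 20 min", "maneuvers": "2."}

results = score_entries(llm, expert)
print(sum(results.values()), "of", len(results), "correct")  # prints: 1 of 3 correct
```

Under this rule, "1 h 20 min" and "2." are scored as wrong even though a human reader would recognize them as equivalent, which is why format errors appear as a separate error category.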

Key findings included the following:

- GPT-4 correctly extracted 94.0% (2,631 of 2,800) of data entries, compared with 63.9% (1,788 of 2,800) for GPT-3.5.

- On the 30 external reports, GPT-4 correctly extracted 90.5% of data entries, compared with 64.2% for GPT-3.5.
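The internal percentages follow directly from the entry counts. A short Python check, assuming rounding to one decimal place; the article does not give entry counts for the external reports, so only the internal figures are reproduced here. The helper name `accuracy` is illustrative.

```python
def accuracy(correct: int, total: int) -> float:
    """Percentage of correct entries, rounded to one decimal place."""
    return round(100 * correct / total, 1)


print(accuracy(2631, 2800))  # GPT-4, internal reports: 94.0
print(accuracy(1788, 2800))  # GPT-3.5, internal reports: 63.9
```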

“Compared with GPT-3.5, GPT-4 more frequently extracted correct procedural data from free-text reports on mechanical thrombectomy performed in patients with ischemic stroke,” the group wrote.

While the study suggests that GPT-4 could provide an alternative to retrieving these data manually, its poor performance on certain data points warrants caution, the authors noted. Of the 169 data entries GPT-4 got wrong, 19 (11.2%) were format errors and 150 (88.8%) were errors of content.
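The error breakdown is consistent with the internal results: 2,800 entries minus 2,631 correct ones leaves 169 incorrect. A quick Python check of the shares, with illustrative variable names and a hypothetical `share` helper:

```python
total_entries = 2800
correct = 2631
incorrect = total_entries - correct        # 169 incorrect GPT-4 entries
format_errors = 19
content_errors = incorrect - format_errors  # 150 content errors


def share(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal."""
    return round(100 * part / whole, 1)


print(incorrect, share(format_errors, incorrect), share(content_errors, incorrect))  # 169 11.2 88.8
```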

“Although GPT-4 may facilitate this process and possibly improve data extraction from radiology reports, errors currently still occur and surveillance by human readers is needed,” the group concluded.
