Human experts outperformed large language model (LLM) decision-support software in assessing CT scan referrals, but adopting such tools may still enhance clinical practice, researchers have found.

The study, published on 27 April in European Radiology, evaluated two LLMs, GPT-4 and Claude-3 Haiku, against assessment by independent experts in determining whether CT referrals were appropriate.

The research team, led by Mor Saban, PhD, of the School of Health Sciences, Faculty of Medical and Health Sciences at Tel Aviv University in Israel, evaluated the appropriateness of imaging test referrals using the ESR iGuide clinical decision-support system as the reference standard.

For their retrospective study, the researchers extracted CT referral data from electronic medical records and imaging of 6,356 patients who had been treated at several medical centers in different European countries. Demographic and other data collected included age, gender, patient status, clinical background, clinical indications, and referral specialties.

The independent expert assessments covered consecutive CT examination referrals made over one to two workdays in 2022. The researchers noted that each referral was evaluated by board-certified radiologists who regularly assess CT referrals at the participating medical centers.

To evaluate the referrals, two radiologists reviewed each one and entered the relevant patient data into the ESR iGuide portal, which provided recommendations on a scale from 1 (not recommended) to 9 (highly recommended). If the two appropriateness scores differed by more than 2 points, an arbitrator evaluated the referral. The resulting output served as the reference standard.
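The two-reader rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's code: the function name `resolve_score` and the `arbitrate` callback are hypothetical, and because the article does not say how two scores within 2 points of each other were combined, averaging them here is an assumption.

```python
from typing import Callable

# From the article: a gap of more than 2 points between the two
# radiologists' ESR iGuide scores sends the referral to an arbitrator.
ARBITRATION_THRESHOLD = 2


def resolve_score(score_a: int, score_b: int, arbitrate: Callable[[], int]) -> float:
    """Combine two 1-9 appropriateness scores into one reference score.

    `arbitrate` is a hypothetical callback returning the arbitrator's score;
    it is only called when the readers disagree by more than 2 points.
    """
    for score in (score_a, score_b):
        if not 1 <= score <= 9:
            raise ValueError(f"score {score} is outside the 1-9 scale")
    if abs(score_a - score_b) > ARBITRATION_THRESHOLD:
        return float(arbitrate())
    # ASSUMPTION: the article does not describe how agreeing scores are
    # merged; this sketch simply averages them.
    return (score_a + score_b) / 2


# Usage: a 2-point gap is resolved without arbitration, a 6-point gap is not.
print(resolve_score(3, 5, arbitrate=lambda: 9))  # 4.0
print(resolve_score(2, 8, arbitrate=lambda: 6))  # 6.0
```

Passing the arbitrator as a callback means the extra review is only requested when the threshold is actually exceeded.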

The study team used the same settings to assess both GPT-4 and Claude-3 Haiku to ensure consistency, noting that they “aimed to gain insights into the models’ baseline performance in recommending imaging studies based exclusively on their preexisting knowledge.”

To systematically handle referrals with missing or inconsistent data (e.g., unspecified body parts, typos, ambiguous notations), the team sorted the data into three major categories: “Medical Test,” “Organ–Body Part,” and “Contrast.” Ambiguous entries that could not be clarified through expert consensus were excluded from the analysis.
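Accuracy within each of these three categories can be illustrated with a short Python sketch: for each category, it is the share of referrals where a model's prediction matches the ESR iGuide reference. The field names (`medical_test`, `body_part`, `contrast`) and the example values are hypothetical, and the article does not describe the study's exact matching rules.

```python
from typing import Dict, List

# Hypothetical field names for the article's three categories:
# "Medical Test", "Organ-Body Part", and "Contrast".
CATEGORIES = ("medical_test", "body_part", "contrast")


def category_accuracy(
    predictions: List[Dict[str, str]], reference: List[Dict[str, str]]
) -> Dict[str, float]:
    """Fraction of referrals where each predicted field equals the reference."""
    if not reference or len(predictions) != len(reference):
        raise ValueError("need equally sized, non-empty prediction and reference lists")
    return {
        category: sum(p[category] == r[category] for p, r in zip(predictions, reference))
        / len(reference)
        for category in CATEGORIES
    }


# Usage with two made-up referrals.
reference = [
    {"medical_test": "CT", "body_part": "abdomen", "contrast": "with contrast"},
    {"medical_test": "CT", "body_part": "chest", "contrast": "without contrast"},
]
predictions = [
    {"medical_test": "CT", "body_part": "abdomen", "contrast": "without contrast"},
    {"medical_test": "CT", "body_part": "head", "contrast": "with contrast"},
]
print(category_accuracy(predictions, reference))
# {'medical_test': 1.0, 'body_part': 0.5, 'contrast': 0.0}
```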

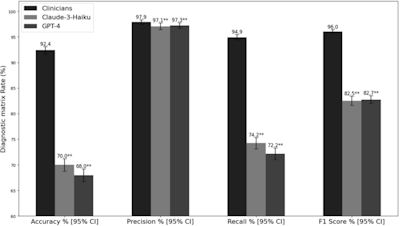

In the “Medical Test” category, the independent experts achieved the highest accuracy against the ESR iGuide reference standard (92.4%), compared with 88.8% for GPT-4 and 85.2% for Claude-3 Haiku.

In the “Organ–Body Part” category, the LLMs performed well, particularly on accuracy and precision, though still below the independent experts: the models' accuracy ranged from 75.3% to 77.8%, compared with 82.6% for the experts. In the “Contrast” category, GPT-4 scored 57.4%, while Claude-3 Haiku fared poorly.

Comparison of performance metrics for medical test identification. Error bars represent 95% confidence intervals calculated using bootstrapping with 1,000 resamples. Statistical significance was determined through comparison with clinicians’ evaluations. Image courtesy of Saban et al. and European Radiology.

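The error bars in the figure come from bootstrapping. Below is a minimal Python sketch of one common variant, the percentile bootstrap, applied to per-referral correct/incorrect outcomes. The article states only that 1,000 resamples were used, so the percentile method, the function name `bootstrap_accuracy_ci`, and the fixed seed are assumptions.

```python
import random
from typing import Sequence, Tuple


def bootstrap_accuracy_ci(
    correct: Sequence[bool],
    n_resamples: int = 1000,
    confidence: float = 0.95,
    seed: int = 0,
) -> Tuple[float, float, float]:
    """Return (accuracy, lower, upper) using a percentile bootstrap.

    ASSUMPTION: the article does not name the bootstrap variant; this sketch
    uses the percentile method and resamples individual referrals.
    """
    if not correct or n_resamples < 1 or not 0 < confidence < 1:
        raise ValueError("need observations, >= 1 resample, and 0 < confidence < 1")
    rng = random.Random(seed)  # fixed seed so results are reproducible
    n = len(correct)
    accuracy = sum(correct) / n
    # Resample referrals with replacement and recompute accuracy each time.
    resampled = sorted(sum(rng.choices(correct, k=n)) / n for _ in range(n_resamples))
    tail = (1 - confidence) / 2
    lower_idx = round(tail * n_resamples)
    upper_idx = max(lower_idx, round((1 - tail) * n_resamples) - 1)
    return accuracy, resampled[lower_idx], resampled[upper_idx]


# Usage with made-up outcomes: 90 correct and 10 incorrect referrals.
outcomes = [True] * 90 + [False] * 10
print(bootstrap_accuracy_ci(outcomes))
```

With 1,000 resamples and 95% confidence, the bounds are the 26th and 975th sorted resampled accuracies, roughly the 2.5th and 97.5th percentiles.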

The authors concluded that although the independent experts consistently outperformed the LLMs across all categories and metrics, LLMs such as GPT-4 and Claude-3 Haiku have a place in augmenting decision-making, particularly in areas such as anatomical knowledge. They cautioned, however, that the models require significant oversight, given their uneven performance in some areas and metrics, such as “Contrast” predictions.

The authors wrote that their study “underscores the continued importance of clinical judgment and specialized medical knowledge in navigating the complexities of real-world referral data, which often contains inconsistencies or incomplete information.” They added that this judgment can be augmented by AI software in decision-making.

The research team acknowledged limitations that they suggest future studies should address, including inconsistencies and missing data in the initial dataset and their intentional exclusion of MRI recommendations. Nevertheless, they said their study highlights both the utility and the challenges of incorporating AI-based software into referral review and recommendations.
