The U.K. Royal College of Radiologists (RCR) recently published data revealing that 12% of U.K. hospitals don't have an agreed-upon policy for alerting clinicians when x-rays or scans contain critical or urgent findings. Worse still, only 7% of hospital departments surveyed have an electronic system that not only alerts doctors but also escalates the alert and ensures action is taken.

The revelations sit uncomfortably with a National Health Service (NHS) whose Five Year Forward View places "quality improvement" at the heart of its aspirations. If the health service really is on "quality street," NHS organizations are going to need to remove the colorful shiny wrappers of strategic mission statements and get their teeth into something with a hard center. Current approaches are typically soft-centered: the RCR audit suggests the vast majority of U.K. hospitals lack adequate quality systems and processes to prevent delayed diagnosis and suboptimal patient management. The implications are significant. The solution, however, is relatively painless, easy to implement, and proven to work across an entire country. It's time the U.K. unwrapped it.

Sweet success

David Howard. All figures courtesy of McKesson.

In October 2014, an RCR survey [1] of radiology departments in England revealed that tens of thousands of suspected cancer patients were being made to wait more than a month for scan results because of delays in reporting. The snapshot study of 50 hospitals showed that 81,137 x-rays and 1,697 CT and MRI scans had not been analyzed for at least 30 days, raising serious concerns for patient safety.

At the time, the well-documented shortage of NHS radiologists was acknowledged as a contributory factor. Eighteen months on, with the U.K. still having one of the lowest numbers of trained radiologists per capita in Europe, the potential for delayed diagnoses remains high. Workforce planning is clearly a major challenge in radiology. However, NHS leaders also know they need to reinforce operations with more robust systems to support quality assurance. Failure to do so places patient care at risk. Sadly, despite major advances in technology, there are still cases of reporting discrepancies and avoidable delays for which NHS patients have paid the ultimate price.

So, how can NHS organizations mitigate the risk of delayed diagnoses? One option might be to look at the recent work carried out in the Republic of Ireland, where a radiology quality improvement plan orchestrated by the Health Service Executive (HSE) has transformed radiology services.

The plan -- which involved multiple stakeholders including senior radiologists, the cancer control board, the Royal College of Surgeons in Ireland (RCSI), and the HSE -- put in place guidelines and mechanisms to address a long-standing, country-wide problem of delayed diagnoses. It drew inspiration from the quality processes the American College of Radiology (ACR) had established to support discrepancy management. In particular, it introduced an automated, fair, and objective peer feedback process to help identify discrepancies, escalate them for review where appropriate, and highlight areas for quality improvement and learning.

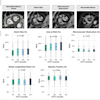

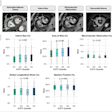

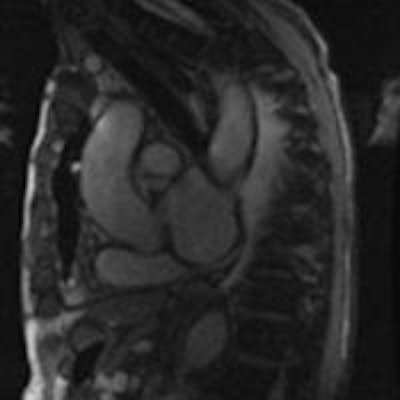

Technology can help boost quality. Here, a reporting radiologist opens the "Critical Results" panel, selects a category (critical, urgent, or unexpected), and adds further details in a comment box as required. The panel offers a choice of "Communication Method" for alerting different people to the finding; the radiologist chooses whom to alert by ticking the appropriate checkboxes. The panel also displays study information, including patient ID, patient name, study, study date, and referring physician. Once the relevant checkboxes are selected, the radiologist clicks "Submit & Close" to close the Conserus panel and continue working.

The Irish Radiology Quality Improvement (RQI) scheme is enabled by a centralized system, provided by McKesson, which integrates with all imaging systems being used in local hospitals. That system now functions across the entire country and means that, each day, more than 300 imaging studies in Ireland are peer-reviewed, escalated, and acted upon accordingly. The impact on the patient experience and patient outcomes is unquestionable.

U.K. hospitals can learn much from the Irish experience. With access to good diagnostics widely heralded as key to cancer survival, hospital trusts (groups) must act proactively on the critical issue of discrepancy management. Radiology departments undoubtedly need support. Each year, clinical radiologists report on millions of x-rays and scans -- and these reports play a crucial role in the diagnosis and management of U.K. patients.

Although only a small minority of reports will contain urgent or unexpected findings, it's vital that those that do are escalated promptly and tracked to ensure communication is complete and the loop is closed. As pressure on NHS resources intensifies, the risk of human error will naturally increase.
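The closed-loop idea can be made concrete with a small model. The following Python sketch is purely illustrative and does not describe how McKesson's system or any NHS trust implements it: the acknowledgement windows in `ACK_WINDOWS`, the roles in `ESCALATION_CHAIN`, and the class and function names are all hypothetical assumptions. An alert stays open until someone acknowledges it; each time an open alert passes its deadline, it moves one step up the escalation chain.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

# Hypothetical acknowledgement windows per finding category. Real values would
# come from local policy; these numbers are placeholders.
ACK_WINDOWS = {
    "critical": timedelta(hours=1),
    "urgent": timedelta(hours=24),
    "unexpected": timedelta(days=7),
}

# Hypothetical escalation chain: who is responsible at each level.
ESCALATION_CHAIN = ["referring clinician", "consultant on call", "clinical director"]


@dataclass
class CriticalResultAlert:
    study_id: str
    category: str                       # "critical", "urgent" or "unexpected"
    raised_at: datetime
    level: int = 0                      # index into ESCALATION_CHAIN
    acknowledged_at: Optional[datetime] = None
    history: List[Tuple[datetime, str]] = field(default_factory=list)

    def acknowledge(self, when: datetime) -> None:
        """Close the loop: the current recipient confirms the finding was received."""
        self.acknowledged_at = when

    @property
    def is_closed(self) -> bool:
        return self.acknowledged_at is not None


def escalate_overdue(alerts: List[CriticalResultAlert], now: datetime) -> List[CriticalResultAlert]:
    """Move each open, overdue alert one step up the chain; return those escalated."""
    escalated = []
    for alert in alerts:
        if alert.is_closed:
            continue
        # Level n counts as overdue once (n + 1) windows have passed since the alert was raised.
        deadline = alert.raised_at + ACK_WINDOWS[alert.category] * (alert.level + 1)
        # Alerts already at the top of the chain are not escalated further.
        if now > deadline and alert.level < len(ESCALATION_CHAIN) - 1:
            alert.level += 1
            alert.history.append((now, ESCALATION_CHAIN[alert.level]))
            escalated.append(alert)
    return escalated
```

In practice, a scheduler would call `escalate_overdue` periodically, and the windows would be set by local policy rather than hard-coded.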

As the prospect of seven-day NHS services looms large, it's no surprise the president of the RCR is urging trusts, health boards, and other providers of imaging services to review their processes and ensure the systems used to communicate test results are robust and timely. Technology is there to help, but without appropriate alert systems, escalation management tools, and wholesale interoperability, hospitals will remain exposed to the risk of failure. Yet that risk can easily be avoided.

The NHS' focus on quality improvement is an essential, ongoing requirement. However, in a highly pressurized environment, it can be difficult for NHS staff to support quality initiatives, because these typically create additional work that may not be recognized as part of an individual's day job. Historically, quality assurance has relied on highly manual processes that disrupt workflow and are prone to human error in busy hospital environments. The shift from quality assurance to quality improvement may seem subtle, but it underlines a collective responsibility among NHS staff and drives the need for collaboration, transparency, and visibility across the enterprise.

Radiologists and their peers need systems and processes that make quality integral to their day jobs without adding to their burden of work. Despite rhetoric to the contrary, utopia is possible.

Major quality

A common misconception within radiology is that quality assurance is too hard: with a national shortage of radiologists and increasing pressure on the system, the profession understandably argues that measuring quality takes a disproportionate amount of time and is unsustainable. Equally misplaced is the belief that the problem can only be solved by aligning radiology information systems (RIS) around a uniform set of quality measurement standards. These myths must be debunked. The answer does not depend on local RIS but on implementing a centralized system that sits above them and focuses entirely on quality.

When radiologists need to carry out a "Peer Review," they open the relevant study and launch the Peer Review panel, which presents two categories: Report Accuracy and Report Quality. Under Report Accuracy, they tick the appropriate checkbox to agree or disagree with the reporting radiologist, and they can also select a report quality rating ranging from "accurate" to "not at all accurate." Once the relevant checkboxes are selected, they click "Submit & Close" to close the Conserus panel and continue working.
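To illustrate how structured peer-review input could feed discrepancy management, here is a minimal Python sketch under stated assumptions. The `PeerReview` record mirrors the two panel categories described above (agree/disagree, plus a quality rating), but the intermediate labels in `QUALITY_SCALE`, the escalation rule in `needs_escalation`, and all names are hypothetical; they are not taken from the Irish RQI scheme or from Conserus.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical ordinal scale for the Report Quality rating. Only the two ends
# come from the panel description; the middle labels are placeholders.
QUALITY_SCALE = ["accurate", "mostly accurate", "partially accurate", "not at all accurate"]


@dataclass(frozen=True)
class PeerReview:
    study_id: str
    reporting_radiologist: str
    reviewer: str
    agrees: bool   # Report Accuracy: agree / disagree with the original report
    quality: str   # Report Quality: one of QUALITY_SCALE

    def __post_init__(self):
        # Basic fairness check: nobody peer-reviews their own report.
        if self.reviewer == self.reporting_radiologist:
            raise ValueError("reviewer must differ from the reporting radiologist")
        if self.quality not in QUALITY_SCALE:
            raise ValueError(f"unknown quality rating: {self.quality!r}")


def needs_escalation(review: PeerReview) -> bool:
    """Assumed rule: escalate any disagreement or a rating in the lower half of the scale."""
    return not review.agrees or QUALITY_SCALE.index(review.quality) >= 2


def discrepancy_rates(reviews):
    """Fraction of each radiologist's peer-reviewed reports that a reviewer disagreed with."""
    totals = defaultdict(int)
    disagreements = defaultdict(int)
    for r in reviews:
        totals[r.reporting_radiologist] += 1
        if not r.agrees:
            disagreements[r.reporting_radiologist] += 1
    return {rad: disagreements[rad] / totals[rad] for rad in totals}
```

Aggregating disagreements per radiologist, rather than acting on single reviews alone, is one way to keep feedback proportionate; any real thresholds would need clinical agreement.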

Ireland's Radiology QI scheme endorses this approach. The Irish HSE took the strategic decision to keep quality and clinical information entirely separate, implementing a dedicated system for quality assurance rather than forcing additional functionality onto existing RIS. The centralized quality system nevertheless draws information from existing RIS and is accessible across multiple jurisdictions, locations, and organizations. As a result, radiologists from across the region can now collaborate on quality issues, reinforcing peer review and ensuring discrepancies are managed efficiently and effectively.
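The separation described here, a dedicated quality layer that reads from many local RIS without changing them, can be sketched as a set of adapters feeding one common record type. This is a hypothetical Python illustration: the RIS names, field names such as `AccessionNo`, and the `QualityRecord` shape are invented for the example and do not describe any real product's export format.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class QualityRecord:
    """Common shape used by the central quality system (hypothetical)."""
    hospital: str
    study_id: str
    modality: str
    reporting_radiologist: str


# Hypothetical adapters: each maps one local RIS export format onto
# QualityRecord. The field names are invented for illustration.
def from_ris_a(row: dict) -> QualityRecord:
    return QualityRecord(row["site"], row["AccessionNo"], row["Modality"], row["ReportedBy"])


def from_ris_b(row: dict) -> QualityRecord:
    # This system stores modality codes in lower case; normalize them.
    return QualityRecord(row["hospital_code"], row["accession"], row["mod"].upper(), row["radiologist"])


ADAPTERS: Dict[str, Callable[[dict], QualityRecord]] = {
    "ris_a": from_ris_a,
    "ris_b": from_ris_b,
}


def collect(feeds: Dict[str, Iterable[dict]]) -> List[QualityRecord]:
    """Read from each local RIS feed into one shared list, without writing back.

    Only quality-relevant fields are kept; clinical report text is not copied,
    mirroring the separation of quality and clinical information in the text.
    """
    records: List[QualityRecord] = []
    for ris_name, rows in feeds.items():
        adapter = ADAPTERS[ris_name]
        records.extend(adapter(row) for row in rows)
    return records
```

Adding a hospital with a different RIS would then mean writing one more adapter, leaving the local system untouched.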

Although public and private healthcare organizations across the Republic of Ireland -- rather like their U.K. counterparts -- use a variety of RIS and PACS, the centralized system joins them together to provide a standardized platform and an optimal mechanism for quality management. The approach keeps quality metrics entirely distinct from clinical information and, crucially, supports radiologists' workflow rather than adding to it.

U.K. hospitals know they must do more to drive quality improvement -- and that, with increased pressure on diagnostic services, radiologists need support to manage their workload and mitigate the risk of human error. If the NHS really is on "quality street," then the 93% of surveyed hospital departments that lack an electronic system to alert, escalate, and ensure action on critical findings must strengthen their procedures accordingly.

David Howard is international implementation lead at McKesson Imaging & Workflow Solutions.

Reference

- Campbell D. "NHS patients facing 'unacceptable' wait for scan results." The Guardian. http://www.theguardian.com/society/2014/nov/13/nhs-scan-result-delays-cancer-patients-x-ray. 12 November 2014. Accessed 27 May 2016.

The comments and observations expressed herein do not necessarily reflect the opinions of AuntMinnieEurope.com, nor should they be construed as an endorsement or admonishment of any particular vendor, analyst, industry consultant, or consulting group.