An artificial intelligence (AI) algorithm can identify cirrhosis on standard T2-weighted liver MRI exams at a level comparable to that of an expert reader, offering promise as a tool for improving detection, German researchers reported in a presentation at the virtual 2020 RSNA meeting.

Researchers from the University Hospital Bonn found that their algorithm was feasible for classifying cirrhosis on routine T2-weighted liver MRI, with or without prior image segmentation. Its classification performance was comparable to, and on some measures slightly higher than, that of a board-certified radiologist.

"This proof-of-concept study demonstrates that a convolutional neural network pretrained on an extensive natural image database allows detection of liver cirrhosis with diagnostic accuracy at expert level in T2-weighted MRI from clinical routine," said presenter Sebastian Nowak, a doctoral student at the University of Bonn.

A global health condition

Cirrhosis, the end stage of chronic liver disease, is a major global health problem, in large part because of the variety of severe complications it can cause, according to Nowak.

"Although liver biopsy is the gold standard for the detection of cirrhosis, imaging has a particularly important role in the evaluation of the disease," he said. "This motivated the investigation of deep learning as a method that could objectively assess relevant features of cirrhosis and could therefore support the radiologist in detecting morphological manifestations of the disease."

A drawback of deep learning is the need for a large number of preclassified images for training. However, transfer learning has proven beneficial when only a small training set is available, Nowak added.

Transfer learning

With transfer learning, researchers can adapt a previously trained model to a different pattern-recognition task, benefiting from its prior training. In their study, the Bonn group sought to use deep transfer learning to develop an algorithm for detecting cirrhosis.

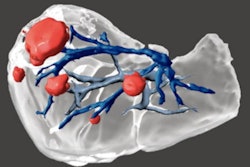

The team retrospectively gathered a study population of 713 patients: 553 with a confirmed diagnosis of liver cirrhosis and 160 with no history of liver disease. For each patient, a single-slice T2-weighted liver MR image at the level of the caudate lobe was exported for analysis. The researchers used 70% of the images for training, 15% for validation, and the remaining 15% for testing.
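To make the split concrete, here is a minimal Python sketch of a 70/15/15 patient-level split of the 713-patient cohort. The function name `split_patients`, the fixed seed, and the rounding rule are illustrative assumptions; the article does not say how the split was drawn or whether it was stratified by diagnosis, so this sketch uses a plain random shuffle.

```python
import random


def split_patients(patient_ids, train_frac=0.70, val_frac=0.15, seed=42):
    """Shuffle patient IDs and split them into train/validation/test lists.

    Hypothetical helper: splitting by patient keeps each patient's image in
    exactly one subset. The seed and rounding rule are illustrative choices.
    """
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * train_frac)
    n_val = round(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]  # the remainder (about 15%) is held out for testing
    return train, val, test


# 553 patients with cirrhosis + 160 without liver disease = 713 in total
patients = [("cirrhosis", i) for i in range(553)] + [("control", i) for i in range(160)]
train, val, test = split_patients(patients)
print(len(train), len(val), len(test))  # 499 107 107
```

A stratified split would additionally keep the cirrhosis-to-control ratio similar across the three subsets, which matters with a cohort this imbalanced.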

Segmentation and classification

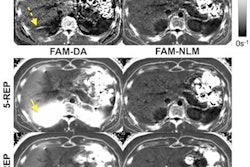

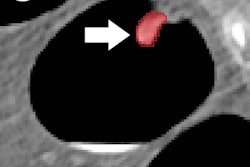

The images were analyzed using two different classification pipelines. The first applied image segmentation before classification, while the second performed classification directly on the unsegmented images.
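The difference between the two pipelines can be sketched in a few lines of Python. The article does not say how the segmentation output was passed to the classifier; this sketch assumes a binary liver mask is used to zero out non-liver pixels (cropping to the liver would be an alternative). `segment_fn` and `classify_fn` are hypothetical placeholders for the trained networks, and images are plain nested lists for brevity.

```python
def apply_mask(image, mask):
    """Zero out every pixel outside the binary liver mask (assumed masking step)."""
    return [[px if m else 0.0 for px, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


def classify_segmented(image, segment_fn, classify_fn):
    """Pipeline 1: segment the liver first, then classify only the masked image."""
    return classify_fn(apply_mask(image, segment_fn(image)))


def classify_unsegmented(image, classify_fn):
    """Pipeline 2: classify the full slice directly, including non-liver anatomy."""
    return classify_fn(image)


# Toy stand-ins (hypothetical): a threshold "segmenter" and a mean-intensity "score"
def toy_segment(img):
    return [[px > 0.5 for px in row] for row in img]


def toy_score(img):
    return sum(map(sum, img)) / sum(len(row) for row in img)


toy_image = [[0.9, 0.8, 0.1], [0.7, 0.6, 0.2]]
print(classify_segmented(toy_image, toy_segment, toy_score))
print(classify_unsegmented(toy_image, toy_score))
```

In the unsegmented pipeline the classifier still sees structures outside the liver, which is consistent with the heat-map findings reported later in the study.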

Each pipeline was trained in two phases. In the first phase, the pretrained models were used only for feature extraction, and only the last output layer was trained.

"All pretrained parameters of the convolution layers were kept frozen," Nowak said. "[Then] in the second phase, the pretrained parameters were unfrozen."

The deep-learning pipeline included a segmentation network and a classification network, both pretrained on the ImageNet database. The researchers compared the algorithms' performance with that of a radiology resident with four years of experience in abdominal imaging and a board-certified radiologist with eight years of experience in abdominal imaging.
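The two-phase scheme Nowak describes, training only the output layer while the pretrained convolutional layers stay frozen and then unfreezing everything, can be illustrated with a toy gradient-descent step in Python. The parameter-group names, values, gradients, and learning rates below are hypothetical; in a framework such as PyTorch, freezing is typically done by setting `requires_grad = False` on the backbone's parameters rather than by filtering updates by hand.

```python
def sgd_step(params, grads, trainable, lr=0.1):
    """Apply one gradient-descent update, leaving frozen parameter groups unchanged."""
    return {
        name: ([w - lr * g for w, g in zip(weights, grads[name])]
               if name in trainable else list(weights))
        for name, weights in params.items()
    }


# Hypothetical parameter groups: a pretrained convolutional backbone and a new output layer
params = {"conv_backbone": [0.5, -0.3], "output_layer": [0.0, 0.0]}
grads = {"conv_backbone": [1.0, 1.0], "output_layer": [1.0, -1.0]}

# Phase 1: backbone frozen, only the output layer is updated (feature extraction)
phase1 = sgd_step(params, grads, trainable={"output_layer"})

# Phase 2: pretrained parameters unfrozen (fine-tuning), here with a smaller learning rate
phase2 = sgd_step(phase1, grads, trainable={"conv_backbone", "output_layer"}, lr=0.01)

print(phase1)
print(phase2)
```

Using a smaller learning rate in the second phase is a common fine-tuning choice, not a detail reported in the study.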

During testing, the segmentation network yielded 99% accuracy.

Performance for detecting cirrhosis on T2-weighted liver MRI exams

| | Radiology resident | Board-certified radiologist | Deep-learning model (unsegmented images) | Deep-learning model (segmented images) |
|---|---|---|---|---|
| Accuracy | 91% | 90% | 95% | 96% |
| Balanced accuracy | 92% | 92% | 90% | 92% |
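Accuracy and balanced accuracy can diverge because the cohort is imbalanced (553 patients with cirrhosis versus 160 controls): accuracy is dominated by the larger class, whereas balanced accuracy, for a binary task, is the mean of sensitivity and specificity. The Python sketch below uses made-up confusion-matrix counts, roughly matching the cohort's class ratio in a test set of 107; they are purely illustrative and are not the study's results.

```python
def accuracy(tp, fn, tn, fp):
    """Share of all cases classified correctly."""
    return (tp + tn) / (tp + fn + tn + fp)


def balanced_accuracy(tp, fn, tn, fp):
    """Mean of sensitivity and specificity, so each class counts equally."""
    sensitivity = tp / (tp + fn)  # share of cirrhosis cases detected
    specificity = tn / (tn + fp)  # share of controls correctly called normal
    return (sensitivity + specificity) / 2


# Made-up counts: 83 cirrhosis cases and 24 controls (NOT the study's confusion matrix)
counts = dict(tp=82, fn=1, tn=20, fp=4)
print(f"accuracy={accuracy(**counts):.3f}, balanced accuracy={balanced_accuracy(**counts):.3f}")
# accuracy=0.953, balanced accuracy=0.911
```

Here a handful of misclassified controls barely moves accuracy but pulls specificity, and therefore balanced accuracy, down noticeably.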

"Remarkably, subsequent fine-tuning by un-freezing pretrained parameters of the [convolutional neural networks] did not lead to significant improvements in segmentation or classification performance," Nowak noted.

Heat maps

On the test set, the researchers generated gradient-weighted class activation maps (Grad-CAM) to visualize the image regions that most influenced the models' predictions. For both models, the right hepatic lobe was the most relevant area, followed by the caudate lobe, according to Nowak.

Interestingly, the Grad-CAM analysis also showed that on the unsegmented images, abdominal areas outside the liver contributed to the model's decisions.
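The core Grad-CAM computation is short: each feature map of the last convolutional layer is weighted by the spatial average of the class score's gradient with respect to that map, the weighted maps are summed, and a ReLU keeps only regions with a positive influence on the prediction. The Python sketch below takes the activations and gradients as given (in practice they come from a forward and backward pass through the network) and normalizes the map to [0, 1]; the toy values are hypothetical, and the upsampling to the input image size that a full implementation performs is omitted.

```python
def grad_cam(activations, gradients):
    """Combine K feature maps A^k using weights alpha_k = spatial mean of dy_c/dA^k.

    activations, gradients: lists of K feature maps, each an H x W list of lists,
    taken from the last convolutional layer for the class of interest.
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights: global average of each gradient map
    alphas = [sum(map(sum, g)) / (height * width) for g in gradients]
    # Weighted sum of feature maps followed by ReLU (keep only positive evidence)
    cam = [[max(0.0, sum(a * fmap[i][j] for a, fmap in zip(alphas, activations)))
            for j in range(width)] for i in range(height)]
    # Scale to [0, 1] for display as a heat map
    peak = max(map(max, cam))
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


# Hypothetical 2-channel, 2 x 2 example
acts = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 2.0], [2.0, 0.0]]]
grads = [[[0.5, 0.5], [0.5, 0.5]], [[-0.25, -0.25], [-0.25, -0.25]]]
print(grad_cam(acts, grads))  # [[1.0, 0.0], [0.0, 1.0]]
```

The second channel has a negative weight, so the locations it activates are suppressed by the ReLU and only the first channel's diagonal remains in the heat map.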

"This analysis motivates further studies to investigate if deep learning may also detect accompanying effects of cirrhosis, such as spleen hypertrophy or venous alterations," Nowak noted.

He also acknowledged several limitations of the work. First, the model was trained only to identify liver cirrhosis and cannot differentiate lower stages of liver fibrosis, he said.

"That will be the next step," he said.

In addition, the method is based only on 2D images and cannot currently be applied directly to CT image data, Nowak added.