Dear Artificial Intelligence Insider,

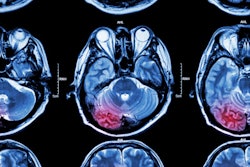

One of the big challenges in developing high-performing radiology artificial intelligence (AI) algorithms is having access to a large image dataset with high-quality labels for training. A group from Switzerland found a way around that problem while developing deep-learning models to detect clinical stroke lesions on MRI.

To increase the size of their training set, the developers produced synthetic images by extracting stroke lesion features from clinical cases and combining them with normal exams. After 40,000 of these synthetic images were added to the training dataset, an AI model achieved more than 90% sensitivity for detecting stroke lesions on a separate test set.

In a presentation at the recent European Society of Cardiology virtual conference, Japanese researchers described how a deep-learning algorithm could yield a promising level of accuracy for diagnosing heart failure on chest radiographs.

Despite slower-than-expected uptake of AI-based clinical applications in medical imaging and the impact of the COVID-19 pandemic, the world market for these technologies is expected to reach 1.3 billion euros by 2024, according to a new report from Signify Research. In an article for AuntMinnieEurope.com, senior market analyst Sanjay Parekh, PhD, describes why growth is projected to accelerate as the pandemic subsides and confidence in AI-based clinical solutions increases.

Deep-learning-based computer-aided detection (CAD) software can identify patients with mild cognitive impairment due to Alzheimer's disease, potentially contributing to efforts to create a prognosis prediction system, researchers from Turkey recently reported. Also, a French group announced that AI software could perform almost as well as senior radiologists in evaluating breast density and five-year cancer risk among women in France.

Meanwhile, two leading European informatics experts have warned that the introduction of AI into daily radiology workflow is being hindered by the lack of high-quality annotated datasets, the difficulty of proving technical validation, and the absence of standards for data sharing between digital systems.

Is there a story you'd like to see covered in the Artificial Intelligence Community? Please feel free to drop me a line.