Researchers from Spain have developed a technique that allows users to visualize and interact with head MRI and CT scans as 3D models using virtual reality (VR) and augmented reality (AR). The technique relies on proprietary software applications specifically designed for examining radiology images.

The group's visualization approach features three different software applications that can convert standard 3D anatomical models typically viewed on a 2D screen into files compatible with VR and AR technologies (Journal of Medical Systems, 14 March 2019).

The study shows how clinicians can integrate VR and AR into the radiology workflow and have access to a more realistic, comprehensive display of brain and skull anatomy, first author Santiago Izard, a doctoral student at the University of Salamanca and CEO of VR/AR start-up ARSoft, told AuntMinnieEurope.com.

"In this moment, it is not usual to create 3D models [for VR and AR] due to the long processing time and additional costs it demands," he said. "The key clinical implication of the system we are implementing is to allow doctors to work with 3D models created from radiological results cheaply and very easily."

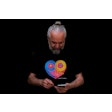

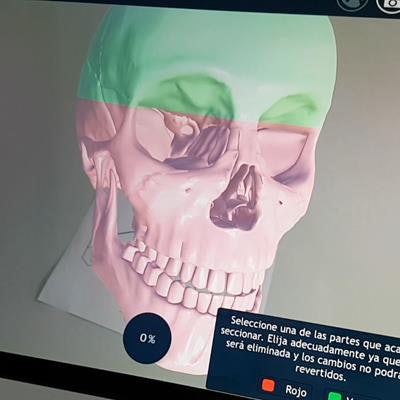

A user interacts with a 3D model of the cranium using AR technology. All images courtesy of Santiago Izard.

Beyond traditional imaging

Technological advancements in recent years have led to major improvements in graphics processing and rendering capabilities, paving the way for the visualization of medical images as complex 3D models, according to the authors. Although many computer programs can generate 3D models from 2D medical images, few are exclusively geared toward the examination of radiology images using VR and AR.

One of the major challenges with integrating high-quality 3D anatomical models into VR and AR is that the models generally consist of too many polygons for VR and AR devices to handle, they noted. Most computer programs reduce the number of polygons that make up a model to be able to visualize it in VR and AR, but this comes at the cost of image quality.
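The polygon-count trade-off can be made concrete with one common simplification technique, vertex clustering. It snaps vertices to a coarse grid, merges all vertices that share a grid cell, and discards triangles that collapse. This is an illustration only: the article does not say which reduction method typical programs use, and the function names `cluster_decimate` and `grid_mesh` are hypothetical.

```python
"""Vertex-clustering mesh simplification: a hypothetical sketch.

Not the method of the authors or of any specific program; it only shows
how merging vertices trades polygon count for geometric detail.
"""


def cluster_decimate(vertices, faces, cell_size):
    """Simplify a triangle mesh by vertex clustering.

    vertices: list of (x, y, z) tuples; faces: list of (i, j, k) index triples.
    Returns (new_vertices, new_faces) with one vertex per occupied grid cell.
    """
    cell_index = {}  # grid cell -> index of the merged vertex
    sums, counts, remap = [], [], []
    for x, y, z in vertices:
        key = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        if key not in cell_index:
            cell_index[key] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        idx = cell_index[key]
        sums[idx][0] += x
        sums[idx][1] += y
        sums[idx][2] += z
        counts[idx] += 1
        remap.append(idx)  # original vertex -> merged vertex

    # Each merged vertex sits at the centroid of the originals it replaces.
    new_vertices = [(s[0] / n, s[1] / n, s[2] / n) for s, n in zip(sums, counts)]

    new_faces, seen = [], set()
    for a, b, c in faces:
        face = (remap[a], remap[b], remap[c])
        if len(set(face)) < 3:
            continue  # triangle collapsed to a line or a point
        key = tuple(sorted(face))
        if key in seen:
            continue  # duplicate created by merging (winding ignored)
        seen.add(key)
        new_faces.append(face)
    return new_vertices, new_faces


def grid_mesh(n):
    """Hypothetical test input: an n x n vertex grid in the z = 0 plane."""
    verts = [(float(i), float(j), 0.0) for j in range(n) for i in range(n)]
    faces = []
    for j in range(n - 1):
        for i in range(n - 1):
            v00 = j * n + i
            v10, v01, v11 = v00 + 1, v00 + n, v00 + n + 1
            faces += [(v00, v10, v11), (v00, v11, v01)]
    return verts, faces
```

Merging the 25 vertices of `grid_mesh(5)` with `cell_size=2.0` leaves 9 vertices and far fewer triangles; any detail smaller than one grid cell is lost, which is the image-quality cost described above.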

Seeking to address this barrier, Izard and colleagues obtained head MRI and CT scans, used segmentation software to select regions of interest on the scans, and turned those regions into 3D meshes with the marching cubes algorithm, which extracts a surface mesh from segmented volume data. Next, they reduced the complexity of the 3D meshes.
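The scan-to-mesh step can be sketched in a few lines. The hypothetical example below thresholds a synthetic voxel volume as a crude stand-in for segmentation software, then emits one square face wherever a segmented voxel touches background. That voxel-face method is deliberately simpler than marching cubes, which interpolates vertex positions along voxel edges to produce smoother surfaces. The names `segment` and `boundary_faces` and all intensity values are assumptions.

```python
"""From a segmented volume to a surface: a simplified, hypothetical sketch.

Step 1 thresholds voxel intensities (a stand-in for real segmentation).
Step 2 emits a square face wherever an inside voxel touches an outside one.
This voxel-face method is a simpler relative of marching cubes, not the
algorithm itself.
"""

# The six face-neighbour directions of a voxel, as (dx, dy, dz).
DIRECTIONS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def segment(volume, threshold):
    # Binary mask indexed [z][y][x]: True where intensity exceeds the threshold.
    return [[[v > threshold for v in row] for row in plane] for plane in volume]


def boundary_faces(mask):
    """Return ((x, y, z), (dx, dy, dz)) pairs for every exposed voxel face."""
    nz, ny, nx = len(mask), len(mask[0]), len(mask[0][0])

    def inside(z, y, x):
        # Voxels outside the grid count as background.
        return 0 <= z < nz and 0 <= y < ny and 0 <= x < nx and mask[z][y][x]

    faces = []
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if not mask[z][y][x]:
                    continue
                for dx, dy, dz in DIRECTIONS:
                    if not inside(z + dz, y + dy, x + dx):
                        faces.append(((x, y, z), (dx, dy, dz)))
    return faces
```

A single segmented voxel yields 6 faces and a 2 x 2 x 2 block yields 24; splitting each square into two triangles gives the triangle count a VR or AR device would have to render.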

After simplifying the 3D meshes, the researchers loaded them into one of three software applications they built with a game engine (Unity3D, Unity Technologies):

- VR Viewer: Produces 3D models compatible with VR headsets (Oculus and Samsung Gear VR, Oculus)

- AR Viewer: Allows for the visualization and manipulation of 3D models with Android and Apple devices

- PC Viewer: Works with a USB motion sensor (Leap Motion controller, Leap Motion) to allow users to interact with 3D models on a monitor using hand motions

These software applications help improve the final step in the process of examining radiology images using VR and AR, making complex 3D models compatible with VR and AR devices without compromising image quality, the authors wrote. Ultimately, the technologies offer "fast and efficient interaction, including rotating, scaling, or cutting the 3D models [to view] complex internal structures. In addition, this system can be used by clinicians to store and explore clinical neuroimages from different locations."
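The interactions the authors list (rotating, scaling, and cutting) map onto simple geometric operations on a mesh's vertices. The sketch below is a hypothetical, framework-free illustration and does not use the Unity3D APIs the viewers were built with. It shows rotation about an axis, uniform scaling about a centre point, and a cutting plane that hides every triangle on one side. The function names are assumptions.

```python
"""Rotating, scaling and cutting a triangle mesh: a hypothetical sketch.

Vertices are (x, y, z) tuples; faces are vertex-index triples. This is a
generic illustration of the interactions, not the authors' Unity code.
"""
import math


def rotate_z(vertices, angle_deg):
    # Rotate every vertex about the z axis by angle_deg (counterclockwise).
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in vertices]


def scale(vertices, factor, centre=(0.0, 0.0, 0.0)):
    # Uniform scaling about a chosen centre point.
    cx, cy, cz = centre
    return [(cx + factor * (x - cx), cy + factor * (y - cy), cz + factor * (z - cz))
            for x, y, z in vertices]


def cut(vertices, faces, point, normal):
    """Keep only faces lying entirely on the non-negative side of a plane.

    The plane passes through `point` with normal `normal`; everything on
    the negative side is hidden, exposing internal structures behind it.
    """
    px, py, pz = point
    nx, ny, nz = normal

    def side(v):
        # Signed distance (up to scale) of vertex v from the plane.
        x, y, z = vertices[v]
        return (x - px) * nx + (y - py) * ny + (z - pz) * nz

    return [f for f in faces if all(side(v) >= 0 for v in f)]
```

Production viewers generally clip triangles that straddle the plane rather than discarding them; dropping them keeps the sketch short.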

A user manipulates a 3D model of the brain with hand gestures.

Automated segmentation

Looking ahead, the researchers proposed a way to overcome yet another barrier to using VR and AR for viewing radiology images: the time-consuming process of image segmentation. Their proposal involved using artificial intelligence (AI) algorithms to automatically segment regions of interest on MRI and CT scans.

"We are currently working on creating and implementing advanced AI algorithms for image segmentation," Izard said.

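The algorithm they are developing relies on a cellular neural network to detect key areas for automated segmentation. Cellular neural networks are a computing paradigm related to artificial neural networks in which each processing unit, or cell, interacts only with its immediate neighbors.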
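The article does not describe the researchers' network. As a generic illustration of what a cellular neural network computes, the sketch below simulates the standard Chua and Yang cell model, in which each cell's state obeys dx/dt = -x + Σ A·y + Σ B·u + z over its 3 x 3 neighborhood, with y a clamped copy of the state and u the input image. It uses a textbook binary edge-detection template; boundary detection is one building block of segmentation, but this is not the authors' algorithm. The names `run_cnn`, `EDGE_A`, `EDGE_B`, and `EDGE_Z` are hypothetical.

```python
"""Minimal Chua-Yang cellular neural network (CNN) simulation.

Hypothetical illustration only: it applies a textbook binary edge-detection
template, not the segmentation network described by the authors.
"""


def cnn_output(x):
    # Piecewise-linear CNN output: equals x on [-1, 1], saturates at +/-1.
    return 0.5 * (abs(x + 1) - abs(x - 1))


def run_cnn(u, A, B, z, dt=0.1, steps=200, boundary=-1.0):
    """Integrate dx/dt = -x + sum(A*y) + sum(B*u) + z with forward Euler.

    u: 2D list of inputs in [-1, 1] (+1 = black, -1 = white).
    A, B: 3x3 feedback and control templates; z: bias.
    Cells outside the grid are held at `boundary` (fixed boundary condition).
    Returns the 2D list of final outputs y.
    """
    rows, cols = len(u), len(u[0])
    offsets = [(k, l) for k in (-1, 0, 1) for l in (-1, 0, 1)]

    def at(grid, i, j):
        # Read a neighbour, substituting the fixed boundary value off-grid.
        if 0 <= i < rows and 0 <= j < cols:
            return grid[i][j]
        return boundary

    # The control (B) term depends only on the constant input: precompute it.
    w = [[z + sum(B[k + 1][l + 1] * at(u, i + k, j + l) for k, l in offsets)
          for j in range(cols)] for i in range(rows)]

    x = [[0.0] * cols for _ in range(rows)]  # initial state x(0) = 0
    for _ in range(steps):
        y = [[cnn_output(v) for v in row] for row in x]
        x = [[x[i][j] + dt * (-x[i][j] + w[i][j]
                              + sum(A[k + 1][l + 1] * at(y, i + k, j + l)
                                    for k, l in offsets))
              for j in range(cols)] for i in range(rows)]
    return [[cnn_output(v) for v in row] for row in x]


# Binary edge-detection template: self-feedback only, Laplacian-like control.
EDGE_A = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
EDGE_B = [[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]]
EDGE_Z = -1.0

if __name__ == "__main__":
    # A 3x3 black square (+1) on a white (-1) background.
    image = [[1.0 if 1 <= i <= 3 and 1 <= j <= 3 else -1.0 for j in range(5)]
             for i in range(5)]
    for row in run_cnn(image, EDGE_A, EDGE_B, EDGE_Z):
        print("".join("#" if v > 0 else "." for v in row))
```

Running the script prints the outline of the square (a ring of `#` around a `.`), because with this template only black cells that have at least one white neighbor settle to black.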

"Our tool fully integrates AR and VR technology with radiological imaging and is specifically designed to study radiology-based results and even plan surgeries," he said. "These technologies allow [users] to interact with 3D models in a realistic way without having to print them, saving time and money."