LONDON - Radiology urgently needs effective vendor-neutral peer feedback and peer review systems for reported imaging studies that are easy to document and share, Dr. Nicola Strickland, president of the Royal College of Radiologists (RCR), told attendees last Friday at the British Institute of Radiology (BIR) annual congress.

In an interview with AuntMinnieEurope.com, she outlined what she sees as the difference between these two terms that underpin her message. Essentially, peer-feedback learning is an informal way of electronically feeding back information to the report writer to enable learning from the case, while peer review is a judgmental process assessing the accuracy of a previously issued report.

RCR President Dr. Nicola Strickland.

"There is no point documenting that peer review has been performed just for the sake of it -- it should be accompanied by a nonthreatening learning process. A peer-review process can be used on occasion in a formal manner, for example if a radiologist's competency is called into question," noted Strickland, consultant radiologist from Imperial College Healthcare National Health Service (NHS) Trust, London.

A means of easy electronic peer feedback would benefit all radiologists, she pointed out.

"It might be painful on occasion when you find you have misinterpreted something, but you'd rather know [about discrepancies] than not know, because by being informed you are less likely to do it again," she said, adding that peer feedback also meant radiologists could tell colleagues when they had done really well. "It means we can easily communicate praise when they have made a correct difficult diagnosis, proven by subsequent investigation."

Peer review failures

Strickland stressed that radiologists in the NHS were already doing a huge amount of routine incidental peer review in their everyday reporting and multidisciplinary teamwork. However, this activity was not documented and often not fed back to the author of the report because there was no simple way of doing so, and thus the learning part of the review often failed to happen. Due to high workload, there was insufficient time to carry out and log peer review or feedback formally.

She urged colleagues to speak up for themselves and make it clear they already do a lot of peer review in their everyday work. It would be helpful to work with vendors to develop digital peer feedback systems that work between different PACS/RIS systems. This would "complete the loop" and turn incidental review into learning for the radiologist who created the report that had been reviewed, she said.

This recent interest in peer feedback and review had assumed importance in light of claims by private teleradiology companies that they routinely peer review a certain percentage of their reports, and follow best practice, according to Strickland. With advances in information technology, conventional radiology has also reached a stage where it should be able to offer peer feedback learning across different imaging IT systems and between district and teaching hospitals, which now often take part in shared regional multidisciplinary team meetings.

"We are doing the same amount of peer review as the teleradiology companies but we are not documenting this," she asserted.

To illustrate this point, Strickland noted that every time radiologists participate in a multidisciplinary team meeting they inevitably peer review all the imaging studies discussed.

"Every time a clinician discusses a case with me at a workstation, or when I am reporting and looking back at previous imaging studies with reports that have already been issued by my colleagues, then I am undertaking peer review as part of my work, but this process hasn't been documented as peer review and no learning from feedback has been achieved," she added.

RADPEER peer review system

Ultimately, the aims of effective peer review and peer feedback should be to improve patient care, minimize patient harm, share learning from discrepancies, reduce further discrepancy, and improve departmental quality assurance. Essentially, it should seek to avoid a blame culture that only engenders fear and mistrust.

|

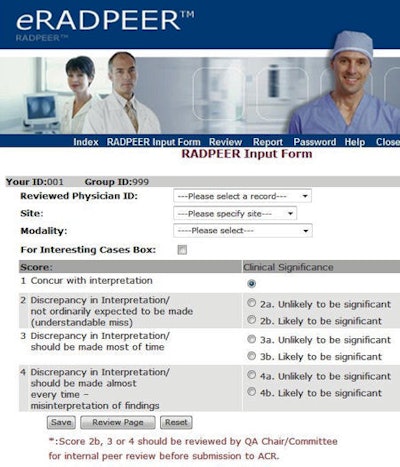

| Screenshot from the eRadPeer system. Image courtesy of the ACR. |

In the U.S., the American College of Radiology (ACR) has introduced the RADPEER peer review system across the country, which attempts to integrate peer review into the normal clinical reporting process. However, there are issues with it because it does not provide feedback learning for the report author, and the point of this system seems to be that participating departments in the U.S. receive a form of financial reimbursement for engaging in it, Strickland said. Furthermore, anonymous surveys have shown that RADPEER is not highly regarded by the radiologists who use it.

"Their initial aims were probably laudable, to show that departments were undergoing a proper quality assurance process, and wanted reassurance that they were providing a safe service and stimulating service improvement," she said. "Unfortunately, the RADPEER system hasn't delivered on this and serves merely as a pointless administrative exercise which does not advance learning."

She said that some of RADPEER's biggest flaws are its use of a numerical scoring system without free text, a culture of fear and mistrust generated amongst radiologists because the results of the reviews are sent back to their chiefs of service, and the lack of any feedback to the radiologists who had issued the reports, meaning that no learning took place as a result of the RADPEER reviews.

"Now RADPEER is established in the U.S. and departments are being reimbursed for using it, it is hard to change or remove it," Strickland said, adding that RADPEER does not accommodate consensus of opinion. "It could be that the reviewer is incorrect. Who's to say that the person who issued the report is wrong and that the reviewer is right? One big problem is that the reviewing radiologist has more information to hand in the form of a later imaging study, so it is not a level playing field upon which to base judgments."

One study evaluating RADPEER reports that some cases were rescored and 80% were found to have no discrepancy when checked. Surveys of U.S. radiologists using the system also found that they thought RADPEER reviews were "done to meet regulatory requirements" and were a "waste of time," she noted.

Peer review system for the future

In designing a potential radiology peer feedback and review system for the U.K., Strickland thinks the prime considerations are that it must avoid a blame culture and embody a process whereby radiologists learn from any discrepancies and subsequent findings. She would also insist on anonymity of the report author on the rare occasions when a formal judgmental peer review process has to be undertaken on a particular radiologist whose work standard has been called into question.

"I would like to see free text and the ability to message somebody about a case with one click to encourage learning from peer feedback. Interestingly, there are a number of PACS vendors that have this facility, and we have this at Imperial," she said. "Our system provides the recipient with a message notification on their screen that allows them to launch the relevant informally peer-reviewed report and images with a single click, and have another look at them when they receive peer feedback from a colleague."

She explained this one-click function made it far more convenient than an alternative where a radiologist would need to email the report author who would be required to launch their PACS, type in details to call up the relevant imaging study, and so on.

"People are too busy for this. Ultimately, the aim is to have this operative across different PACS and RIS vendors so that learning from peer feedback can occur amongst radiologists working in different hospitals with different makes of imaging IT equipment," she said.

Currently, this type of system only operates within a vendor's own system, not between different vendors, she added.

Strickland hopes the topic of designing a vendor-neutral peer feedback system will be on the work agenda for the RCR's Radiology Informatics Committee (RIC) meeting. It should be facilitated if regional Network Teleradiology Platforms are implemented in the U.K., as detailed in the RIC's recently released e-document: "Who shares wins: Efficient, collaborative radiology solutions."