Better education programs are needed to address the serious problem of variability in how radiologists interpret BI-RADS assessment categories in population-based breast cancer screening, Spanish researchers have recommended.

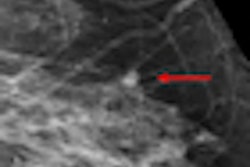

Mammography is not a perfect screening method, and efforts such as double reading have been made to improve its accuracy, but few studies have analyzed observer variability in mammography interpretation using both BI-RADS assessment and breast density categories.

In a study published online on 19 September in the British Journal of Radiology, researchers assessed inter- and intraobserver agreement on BI-RADS assessment and breast density categories in a breast cancer screening program in Barcelona, Spain. They also investigated the association between women's characteristics and BI-RADS discordance.

The stratified random sample of 100 mammograms included 13 histopathologically confirmed breast cancers, 51 true-negative results, and 36 false-positive results, according to principal investigator Dr. Xavier Castells, PhD, from Servei d'Epidemiologia i Avaluació, Hospital del Mar - Parc de Salut Mar, in Barcelona. Twenty-one expert radiologists from radiological units of breast cancer screening programs in Catalonia, Spain, reviewed the mammograms twice, with a six-month interval between readings.

The readers described each mammogram using BI-RADS assessment and breast density categories. Inter- and intraradiologist agreement was assessed using percentage concordance and the kappa (κ) statistic.
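For readers unfamiliar with the statistic, the sketch below shows how percentage concordance and Cohen's kappa can be computed for two readers. Kappa is defined as (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each reader's category frequencies. This is an illustration under stated assumptions, not the study's method: the article does not say which kappa variant was used across 21 readers, and the function names and example ratings are hypothetical.

```python
from collections import Counter


def percent_agreement(ratings_a, ratings_b):
    """Share of cases on which two readers assigned the same category."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two equal-length, non-empty rating lists")
    matches = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return matches / len(ratings_a)


def cohens_kappa(ratings_a, ratings_b):
    """Two-reader Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(ratings_a)
    p_o = percent_agreement(ratings_a, ratings_b)
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    # Chance agreement: sum over categories of the product of each reader's marginal proportions
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_e == 1:
        # Both readers used one identical category for every case; kappa is undefined,
        # so this sketch returns 1.0 by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical BI-RADS assessments from two readers on six mammograms
reader_a = ["II", "II", "III", "I", "IV", "II"]
reader_b = ["II", "III", "III", "I", "II", "II"]
print(round(percent_agreement(reader_a, reader_b), 3), round(cohens_kappa(reader_a, reader_b), 3))
# prints: 0.667 0.5
```

In this toy example the readers agree on four of six cases, but because much of that agreement could arise by chance, kappa is lower than the raw concordance.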

The researchers found fair interobserver agreement for BI-RADS assessment (κ = 0.37, 95% confidence interval [CI]: 0.36-0.38). When the categories were collapsed according to whether additional evaluation was required (categories 0, III, IV, and V) or not (categories I and II), agreement was moderate (κ = 0.53, 95% CI: 0.52-0.54).
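The recall collapse described above can be sketched as a simple mapping from BI-RADS assessment categories to a recall decision. The function names and ratings below are hypothetical and only illustrate the grouping; they show how two readers who never assign the same exact category can still agree on every recall decision, which is how collapsing categories can raise agreement.

```python
# Grouping as described in the study: 0, III, IV and V -> recall; I and II -> no recall
RECALL_CATEGORIES = {"0", "III", "IV", "V"}
NO_RECALL_CATEGORIES = {"I", "II"}


def to_recall(category):
    """Map one BI-RADS assessment category to a recall decision."""
    if category in RECALL_CATEGORIES:
        return "recall"
    if category in NO_RECALL_CATEGORIES:
        return "no recall"
    raise ValueError(f"unknown BI-RADS assessment category: {category!r}")


def exact_agreement(ratings_a, ratings_b):
    """Share of cases with the same BI-RADS category (all categories kept)."""
    return sum(a == b for a, b in zip(ratings_a, ratings_b)) / len(ratings_a)


def recall_agreement(ratings_a, ratings_b):
    """Share of cases with the same recall decision after collapsing categories."""
    pairs = [(to_recall(a), to_recall(b)) for a, b in zip(ratings_a, ratings_b)]
    return sum(a == b for a, b in pairs) / len(pairs)


# Hypothetical readings of five mammograms
reader_a = ["III", "IV", "II", "I", "0"]
reader_b = ["IV", "V", "I", "II", "III"]
print(exact_agreement(reader_a, reader_b), recall_agreement(reader_a, reader_b))
# prints: 0.0 1.0
```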

Intraobserver agreement for BI-RADS assessment was moderate across all categories (κ = 0.53, 95% CI: 0.50-0.55) and substantial for the recall decision (κ = 0.66, 95% CI: 0.63-0.70).

For breast density, inter- and intraradiologist agreement were both substantial (κ = 0.73, 95% CI: 0.72-0.74 and κ = 0.69, 95% CI: 0.68-0.70, respectively), according to the researchers.
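The verbal labels used in these results (fair, moderate, substantial) appear consistent with the widely used Landis and Koch benchmarks for kappa. The article does not name the scale, so treating it as the one applied here is an assumption. A minimal sketch applying those cut-offs to the reported point estimates:

```python
def landis_koch_label(kappa):
    """Verbal label for a kappa value using the Landis and Koch benchmarks."""
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


# Point estimates as reported in the article
reported = {
    "BI-RADS, interobserver, all categories": 0.37,
    "BI-RADS, interobserver, recall": 0.53,
    "BI-RADS, intraobserver, all categories": 0.53,
    "BI-RADS, intraobserver, recall": 0.66,
    "Breast density, interobserver": 0.73,
    "Breast density, intraobserver": 0.69,
}

for name, kappa in reported.items():
    print(f"{name}: kappa = {kappa:.2f} -> {landis_koch_label(kappa)}")
```

Each label produced this way matches the wording used in the study's results.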

"Although the most frequent disagreement in BI-RADS assessment was found between categories I or II and category III, a nonnegligible discordance between categories I or II and categories IV or V was found, especially in cases of cancer," the researchers wrote.

Previous studies have also reported fair to moderate interobserver agreement in mammographic interpretation, but they were performed in different settings: some included both diagnostic and screening mammograms, the breast disease status of the cases varied greatly, only some used BI-RADS classification, and sample sizes and the experience of participating radiologists differed.

The current study was based on a subsample from an earlier study, and the researchers tried to balance a large number of experienced radiologists with a sufficient number of mammograms and an interval between readings long enough to avoid memory bias.

Disagreement was more common between adjacent categories than between distant ones. That pattern may be reassuring for breast density, but disagreement between adjacent BI-RADS assessment categories can be highly relevant.

"BI-RADS assessment categories were ordered according to their positive predictive value but, for example, disagreement between categories II and III means that one of the radiologists has detected a benign lesion and finds no reason to recall and the other has found a probably benign lesion and recommends further assessment," the authors noted. "In fact, the information that females get, which is what modifies the next step, is recall for further assessment."

When analyzing concordance between no recall (categories I and II) and recall, Castells and colleagues found that disagreements between no recall and categories IV and V (suspicious of malignancy), although the least frequent, were not negligible.

"This is especially important in the group of 13 females with cancer: Of 2,730 possible pairs of radiologists, 88 pairs would have disagreed on recall," they wrote. "This clearly supports the need for double reading in population-based screening programs."
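The figure of 2,730 appears to correspond to every distinct pair of the 21 radiologists (21 × 20 / 2 = 210) multiplied by the 13 cancer cases. The article does not spell out this derivation, so it is an assumption. Under that reading, the quoted 88 disagreements amount to roughly 3% of pairings, as this short calculation shows:

```python
from math import comb

readers = 21
cancer_cases = 13
disagreeing_pairs = 88  # as quoted by the researchers

# Assumed derivation: distinct reader pairs times cancer cases
reader_pairs = comb(readers, 2)
pair_case_combinations = reader_pairs * cancer_cases
share = disagreeing_pairs / pair_case_combinations

print(reader_pairs, pair_case_combinations, f"{share:.1%}")
# prints: 210 2730 3.2%
```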

The authors acknowledged several limitations. The observed agreement might differ from the overall agreement within a population-based breast cancer screening program because breast cancer cases were overrepresented in the sample. They also did not measure agreement before and after the BI-RADS instruction session, so they cannot tell whether the session had any effect on agreement. Nor do they know whether agreement was affected by the lack of information on the subjects' characteristics or of access to prior mammograms, which readers may have had in the context of a population-based screening program. Lastly, it would have been interesting to know the agreement among radiologists by type of lesion and breast density.

Until variability among radiologists in interpreting BI-RADS assessment categories is reduced, double reading should be recommended in population-based screening programs, the researchers concluded.