Breast imaging reports generated with automated speech recognition software are eight times more likely to contain major errors than those generated with conventional dictation transcription, and radiologists must be alert to this potential problem, according to new peer-reviewed research.

Many medical imaging departments have adopted or are in the process of adopting automated speech recognition (ASR) as an alternative to conventional dictation transcription -- in part to reduce report production time and thus save money. However, a new study published in the American Journal of Roentgenology suggests that automated speech recognition users must be vigilant, carefully editing reports generated by the technology not only to ensure quality patient care, but also for professionalism, according to Sarah Basma, from Women's College Hospital in Toronto, and colleagues (AJR, October 2011, Vol. 197:4, pp. 1-5).

"A radiology report is in many respects the single most important factor by which radiologists are judged by their clinical colleagues," the authors wrote. "The abundance of grammatical errors found in this study can be perceived as a lack of professionalism and of carelessness on the part of the reporting radiologists."

Basma and colleagues reviewed breast imaging reports from January 2009 to April 2010, looking for major and minor errors. Of the 615 reports used in the study, 308 were generated with automated speech recognition and 307 with conventional dictation transcription. Thirty-three speakers produced the 615 reports, with 11 of them using both automated speech recognition and conventional dictation transcription.

The researchers defined as "major" those errors that affected the understanding of the report and, thus, patient care.

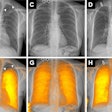

Basma's team found at least one major error in almost a quarter of the speech recognition reports (23%), while only 4% of reports generated by conventional dictation transcription contained a major error. Neither training level nor English language proficiency was a significant error factor: Error rates didn't differ much between reports generated by staff radiologists and trainees, or between those generated by native English speakers and those whose native language was not English.

[Table: Frequency of major errors in breast imaging reports by report method. Table courtesy of the American Journal of Roentgenology.]
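The article does not say how the "eight times more likely" figure was derived. As a rough illustration only, the sketch below shows what the rounded percentages reported above (23% for ASR, 4% for conventional transcription) imply for a crude risk ratio and a crude odds ratio. The function names are hypothetical, and the results need not match the study's figure, which may rest on unrounded counts or a statistical adjustment not described here.

```python
def risk_ratio(p_exposed: float, p_control: float) -> float:
    """Ratio of two proportions (how many times larger one rate is)."""
    return p_exposed / p_control


def odds_ratio(p_exposed: float, p_control: float) -> float:
    """Ratio of the odds p / (1 - p) for two proportions."""
    odds_exposed = p_exposed / (1 - p_exposed)
    odds_control = p_control / (1 - p_control)
    return odds_exposed / odds_control


# Rounded percentages as reported in the article (assumed exact for this sketch).
asr_rate = 0.23           # ASR reports with at least one major error
conventional_rate = 0.04  # conventional reports with at least one major error

rr = risk_ratio(asr_rate, conventional_rate)
crude_or = odds_ratio(asr_rate, conventional_rate)

print(f"Crude risk ratio: {rr:.2f}")
print(f"Crude odds ratio: {crude_or:.2f}")
```

With these rounded inputs the crude risk ratio is about 5.75 and the crude odds ratio about 7.17, so the two measures differ noticeably even though both describe the same pair of percentages.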

The group did find that the imaging modality was a predictor of major errors in final reports. Major errors were more common in breast MRI reports (35% of ASR, 7% of conventional reports). The lowest error rates were found in reports of interventional procedures (13% of ASR, 4% of conventional reports) and mammography (15% of ASR, no conventional reports). "Findings" was the most common report section for errors in both ASR-generated and conventional reports.

Why are there more errors in reports generated by automated speech recognition? Perhaps it's the immediacy of the technology that contributes to error, or a lack of awareness of the high prevalence of errors in speech recognition reporting, according to the authors.

"It has been suggested that reviewing reports between six and 24 hours after dictation may be helpful in detecting errors that can be missed when reports are verified immediately, as with ASR," they wrote. "[And] with pressure to decrease reporting times, radiologists unaware of the high frequency of errors associated with ASR may only superficially edit reports before signing them."