Use of a gamma camera to image sentinel lymph nodes during cancer surgery can help the surgeon assess the extent of cancer spread, and could help reduce mortality rates. Portable gamma camera systems for this application are already commercially available, but one key challenge of this approach is determining the exact spatial location of the recorded signal.

Speaking at the recent MediSens conference in London, John Lees from the University of Leicester described how combining gamma and optical imaging could solve this localization problem. "We can use hybrid gamma and optical imaging to improve diagnosis," he explained. "The idea is to take a small camera into the operating theater and help improve treatment outcomes for patients."

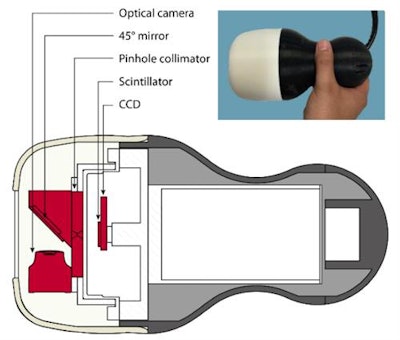

Lees and colleagues have created a handheld hybrid gamma camera (HGC) based upon a high-resolution CCD chip coated with a columnar caesium iodide scintillator, located behind a pinhole collimator. The columns in the scintillator act as light pipes, channeling the light onto the CCD and maintaining a high spatial resolution. The device integrates an optical camera aligned to provide the same field-of-view.

The hybrid gamma camera. Image courtesy of John Lees.

The team tested the HGC using a hot-spot phantom filled with radioactive solution, and observed that the camera could resolve features as small as 1 mm. They also found the sensitivity to be sufficient for the task: "We could detect down to 25 kBq in about one minute," Lees said.

Lees described a contrast-to-noise ratio (CNR) analysis performed on a head-and-neck phantom, using cameras with 0.5 and 1 mm pinhole collimators. After imaging for just 10 seconds, the HGC could identify inserts with 0.1 and 0.2 MBq activity at the parotid gland level, and 0.5 and 1.0 MBq signals at the submandibular level. In another example, imaging a thyroid phantom filled with Tc-99m demonstrated that the HGC could visualize the thyroid after just 200 seconds, with greater detail appearing as scan time increased.
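For readers unfamiliar with the metric, a CNR calculation can be sketched as follows. The talk did not specify which definition the team used, so this assumes one common form: the difference between the mean counts in the insert region and in the background region, divided by the standard deviation of the background. The function name and the pixel counts are hypothetical and chosen only for illustration.

```python
from statistics import mean, pstdev


def contrast_to_noise_ratio(signal_counts, background_counts):
    """Return (mean signal - mean background) / std of background.

    Assumed definition; other CNR conventions exist.
    """
    noise = pstdev(background_counts)
    if noise == 0:
        raise ValueError("background has zero variance; CNR is undefined")
    return (mean(signal_counts) - mean(background_counts)) / noise


# Hypothetical per-pixel counts from two regions of interest.
insert_roi = [14, 15, 16, 15]      # pixels covering a radioactive insert
background_roi = [3, 7, 3, 7]      # pixels from a nearby source-free area

cnr = contrast_to_noise_ratio(insert_roi, background_roi)
print(f"CNR = {cnr:.1f}")
```

Under this definition, a higher CNR means the insert stands out more clearly from the background fluctuations, which is why it is a convenient figure for comparing collimators and acquisition times.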

Clinical transition

The next step involves testing the HGC in the clinic. "Last year, we got ethical approval to undertake a clinical evaluation of the camera with patient volunteers," Lees explained.

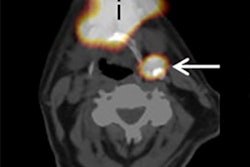

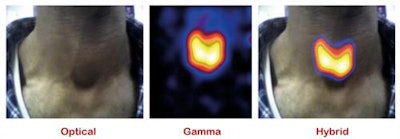

A clinical study of thyroid gland imaging, showing optical, gamma, and hybrid images of the neck at 100 mm (300 seconds acquisition time) 1 hour 50 minutes after injection of I-123 NaI. Image courtesy of John Lees.

The team has already demonstrated that the HGC can perform combined optical/gamma imaging of the thyroid gland in patients. "We've got sensitivity, portability, and spatial resolution," Lees said.

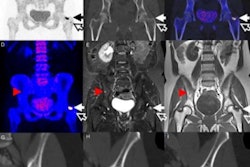

He also presented a recent lymphoscintigraphy image in which the HGC clearly visualized the lymph nodes.

Lees also shared an image from a lacrimal drainage study. A couple of hours after administering 1 MBq of the radiopharmaceutical Tc-99m DTPA, the hybrid image (using a 5-minute acquisition at 7 cm from the patient) clearly showed the path of lacrimal drainage.

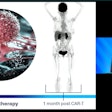

"We are also looking at preclinical imaging and pushing our camera to higher resolution," Lees said. With this aim, the researchers have demonstrated that the HGC can image a radiopharmaceutical in a mouse, with a 5-minute acquisition at a distance of 12.5 cm. He noted that the image quality was comparable with that recorded by the U-SPECT preclinical imaging system.

The next steps

Looking further ahead, one potential evolution of the hybrid camera could be to combine radionuclide imaging with near-infrared (NIR) fluorescence imaging, using an integrated gamma/NIR camera. "There is a lot of interest in taking a radiolabeled tracer and combining it with a fluorescent tracer," Lees said. Such an approach could be employed in both preclinical and surgical applications.

He presented images of a four-hole phantom recorded using optical, gamma, hybrid optical/gamma, and NIR fluorescence. Combining all three modalities should provide exceptionally good spatial resolution. He also showed the first examples of in vivo radio-NIR fluorescent imaging in a mouse.

Finally, Lees described the use of two cameras to perform depth estimation. He presented an image of a breast phantom containing two radioactive Tc-99m sources. The positions of the sources were apparent, but a single image could not provide information as to their depths. By using two cameras, and taking a sequence of optical and gamma images, it was possible to estimate the depth of the two sources. "We believe that we can also do this inside the body," he noted.
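The talk did not detail how the depth estimate was computed. As a rough illustration of why two views help, the sketch below uses textbook parallel-axis stereo triangulation with two idealized pinhole cameras: a source's depth is inversely proportional to the shift (disparity) between its positions in the two images. This is an assumed, simplified model and not necessarily the team's method; the function names, the 50 mm baseline, and the 10 mm pinhole-to-detector distance are all hypothetical.

```python
def project(source_x_mm, source_z_mm, camera_x_mm, pinhole_to_detector_mm):
    """Image-plane position of a point source for an ideal pinhole camera.

    Uses the non-inverted (virtual image plane) sign convention.
    """
    return pinhole_to_detector_mm * (source_x_mm - camera_x_mm) / source_z_mm


def depth_from_disparity(x_left_mm, x_right_mm, baseline_mm,
                         pinhole_to_detector_mm):
    """Depth of a source seen by two parallel pinhole cameras."""
    disparity = x_left_mm - x_right_mm
    if disparity <= 0:
        raise ValueError("disparity must be positive for a source in front "
                         "of both cameras")
    return pinhole_to_detector_mm * baseline_mm / disparity


# Hypothetical geometry: cameras 50 mm apart, detector 10 mm behind pinhole.
BASELINE_MM = 50.0
PINHOLE_TO_DETECTOR_MM = 10.0

# Simulate a source 10 mm to the side and 100 mm deep, then recover its depth.
x_left = project(10.0, 100.0, 0.0, PINHOLE_TO_DETECTOR_MM)
x_right = project(10.0, 100.0, BASELINE_MM, PINHOLE_TO_DETECTOR_MM)
depth = depth_from_disparity(x_left, x_right, BASELINE_MM,
                             PINHOLE_TO_DETECTOR_MM)
print(f"estimated depth: {depth:.1f} mm")
```

A single camera records only the image-plane position, which is the same for every source along one line of sight; the second viewpoint breaks that ambiguity.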

An audience member asked Lees about the possibility of adding another modality, such as ultrasound, to the camera. "Ideally, I would like to combine optical, gamma, NIR, and ultrasound," he responded. "But the technology needed to combine them is not straightforward."

© IOP Publishing Limited. Republished with permission from medicalphysicsweb, a community website covering fundamental research and emerging technologies in medical imaging and radiation therapy.