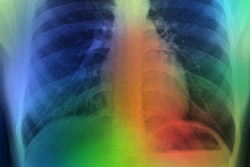

Radiologists outperformed four commercially available AI algorithms in diagnosing lung diseases on chest x-rays, and the algorithms showed clear limitations in complex cases, according to a study published on 26 September in Radiology.

The Achilles' heel of AI may be its inability to synthesize the clinical information that radiologists use daily, such as the patient’s clinical history and previous imaging studies, wrote lead author Dr. Louis Plesner, of Herlev and Gentofte Hospital in Copenhagen, Denmark, and colleagues.

"We speculate that the next generation of AI tools could become significantly more powerful if capable of this synthesis as well, but no such systems exist yet," the group noted.

While AI tools are increasingly being approved for use in radiology departments, there is an unmet need to test them further in real-life clinical scenarios, according to the authors.

To that end, the group compared the performance of four commercially available algorithms -- Annalise Enterprise CXR (Annalise.ai), SmartUrgences (Milvue), ChestEye (Oxipit), and AI-Rad Companion (Siemens Healthineers) -- with the clinical radiology reports of a pool of 72 radiologists. The dataset included 2,040 consecutive adult chest x-rays taken over a two-year period at four Danish hospitals in 2020. The median patient age was 72 years.

The chest x-rays were assessed for three common findings: airspace disease, pneumothorax, and pleural effusion. Of the 2,040 x-rays, 669 (32.8%) had at least one of these target findings.

According to the findings, the AI tools achieved moderate to high sensitivity, ranging from 72% to 91% for airspace disease, 63% to 90% for pneumothorax, and 62% to 95% for pleural effusion. However, their positive predictive values (PPV), the probability that a patient with a positive result truly has the disease, were lower. For pneumothorax, for instance, the algorithms' PPVs ranged from 56% to 86%, compared with 96% for the radiologists, the authors noted.

For airspace disease, the algorithms' PPVs were also lower than the radiologists', ranging from 40% to 50%.

"The AI predicted airspace disease where none was present five to six out of 10 times. You cannot have an AI system working on its own at that rate," Plesner said, in a news release from RSNA.
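The link between the 40% to 50% PPV range and Plesner's "five to six out of 10" estimate follows from the standard definitions of the two metrics. As a brief worked illustration, write TP, FP, and FN for the numbers of true-positive, false-positive, and false-negative results (notation introduced here for illustration, not taken from the study):

```latex
\begin{aligned}
\text{Sensitivity} &= \frac{TP}{TP + FN} \\
\text{PPV} &= \frac{TP}{TP + FP} \\
\frac{FP}{TP + FP} &= 1 - \text{PPV} \\
\text{PPV} \in [0.40,\, 0.50] &\;\Rightarrow\; 1 - \text{PPV} \in [0.50,\, 0.60]
\end{aligned}
```

A PPV of 40% to 50% therefore means that 5 to 6 of every 10 positive airspace disease calls were false positives. Because sensitivity does not include false positives at all, a tool can score well on sensitivity while still raising many false alarms.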

Plesner noted that most studies evaluate only whether AI can determine the presence or absence of a single disease, a much easier task than real-life scenarios, in which patients often present with multiple diseases.

"In many prior studies claiming AI superiority over radiologists, the radiologists reviewed only the image without access to the patient’s clinical history and previous imaging studies. In everyday practice, a radiologist’s interpretation of an imaging exam is a synthesis of these three data points," he said.

Ultimately, current commercially available AI algorithms for interpreting chest x-rays don't appear to be ready for making autonomous diagnoses, but they may be useful as tools for boosting radiologists’ confidence in their diagnoses by providing a second look, the researchers added.

"Future studies could focus on prospective assessment of the clinical consequence of using AI for chest radiography in patient-related outcomes," the group concluded.
