A Google Health study involving nearly 29,000 mammograms from the U.K. and U.S. has provided fresh evidence that artificial intelligence (AI) can be as effective as human radiologists at detecting breast cancer. The findings were published in Nature on 1 January.

Lead author Scott Mayer McKinney and colleagues from Google Health, DeepMind, Imperial College London, the U.K. National Health Service (NHS), and Northwestern University in Evanston, Illinois, U.S., developed a deep-learning AI model that could identify breast cancer on screening mammograms. They evaluated this system using data from almost 26,000 women examined at three NHS hospital groups as part of Optimam, an image database of more than 80,000 digital images extracted from the U.K. National Breast Screening System (NBSS).

The U.S. dataset included mammograms from just over 3,000 women at Northwestern.

This was a research study, not a clinical one, but the AI system identified cancers from the images with a degree of accuracy similar to that of expert radiologists and also reduced the proportion of screening errors, according to the authors. They reported absolute reductions of 5.7% and 1.2% (U.S. and U.K., respectively) in false positives and of 9.4% and 2.7% (U.S. and U.K., respectively) in false negatives.
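To make the error figures concrete, here is a minimal Python sketch of how false-positive and false-negative rates, and the difference between two readers, are conventionally computed. It assumes the reported reductions are differences in rates expressed in percentage points. The function names and all counts are hypothetical and are not taken from the study.

```python
def error_rates(tp, fp, tn, fn):
    """Return (false-positive rate, false-negative rate) from confusion counts."""
    fpr = fp / (fp + tn)  # share of cancer-free exams flagged as suspicious
    fnr = fn / (fn + tp)  # share of cancer cases that were missed
    return fpr, fnr


def absolute_reduction(baseline_rate, new_rate):
    """Difference between two rates, in percentage points."""
    return (baseline_rate - new_rate) * 100


# Hypothetical counts for illustration only (not data from the study).
reader_fpr, reader_fnr = error_rates(tp=80, fp=100, tn=900, fn=20)
ai_fpr, ai_fnr = error_rates(tp=85, fp=80, tn=920, fn=15)

print(f"False-positive reduction: {absolute_reduction(reader_fpr, ai_fpr):.1f} points")
print(f"False-negative reduction: {absolute_reduction(reader_fnr, ai_fnr):.1f} points")
```

An absolute reduction in false positives corresponds to an equal gain in specificity, and an absolute reduction in false negatives to an equal gain in sensitivity.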

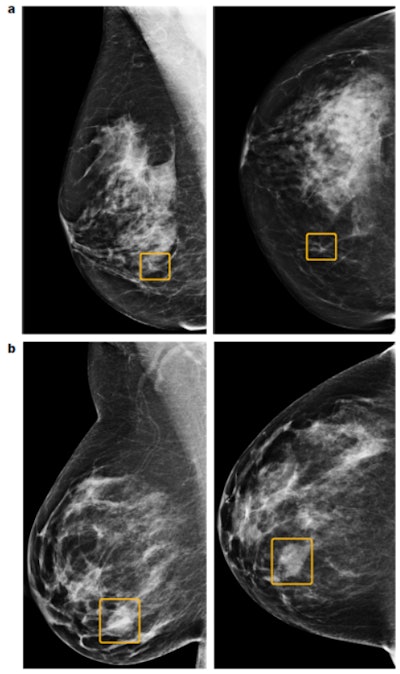

Discrepancies between the AI system and human readers. The top images (a) show a sample cancer case that was missed by all six readers in the U.S. reader study but correctly identified by the AI system. The malignancy, outlined in yellow, is a small, irregular mass with associated microcalcifications in the lower inner right breast. The bottom two images (b) show a sample cancer case that was caught by all six readers in the U.S. reader study but missed by the AI system. The malignancy is a dense mass in the lower inner right breast. Left = mediolateral oblique view; right = craniocaudal view. Images courtesy of Scott Mayer McKinney and colleagues and Nature.

Double reading and workload

In a small secondary analysis, the team simulated the AI model's role in the double-reading process used by the NHS. In this process, scans are interpreted by two separate radiologists, each of whom reviews the scan and recommends a follow-up or no action. Any positive finding is referred for biopsy, and in cases where the two readers disagree, the case goes to a third clinical reviewer for a decision.

The simulation compared the AI's decision with that of the first reader. Scans were only sent to a second reviewer if there was a disagreement between the first reader and the AI. The findings showed that using the AI in this way could reduce the workload of the second reviewer by as much as 88%, which could ultimately help to triage patients in a shorter time frame, they explained.
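The simulated workflow described above can be sketched in a few lines of Python. This is an illustrative reconstruction under stated assumptions, not the authors' code: the `Case` record, the function name, and the toy decisions are all hypothetical, and each decision is reduced to a simple recall or no-recall flag.

```python
from dataclasses import dataclass


@dataclass
class Case:
    first_reader_recall: bool  # first radiologist recommends follow-up
    ai_recall: bool            # AI model recommends follow-up


def second_reader_workload(cases):
    """Fraction of cases still sent to a second human reader.

    A case goes to the second reader only when the first reader
    and the AI disagree; otherwise their shared decision stands.
    """
    if not cases:
        return 0.0
    disagreements = sum(c.first_reader_recall != c.ai_recall for c in cases)
    return disagreements / len(cases)


# Hypothetical toy data: 10 screening cases, 2 of them with disagreement.
cases = (
    [Case(False, False)] * 7   # both say no action
    + [Case(True, True)] * 1   # both say follow-up
    + [Case(True, False)] * 1  # disagreement
    + [Case(False, True)] * 1  # disagreement
)

workload = second_reader_workload(cases)
print(f"Second-reader workload: {workload:.0%}")
print(f"Workload reduction: {1 - workload:.0%}")
```

In standard double reading every case is seen by a second reader, so the workload reduction in this sketch is simply the share of cases on which the first reader and the AI agree.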

The results are encouraging and insightful, says Prof. Ara Darzi.

Most of the U.K. exams were carried out at St. George's Hospital London, the Jarvis Breast Centre in Guildford, and Addenbrooke's Hospital in Cambridge. By chance, the vast majority of images used in this study were acquired on mammography systems made by Hologic, and future research should assess the performance of the AI system across a variety of manufacturers in a more systematic way, according to the authors.

"While these findings are not directly from the clinic, they are very encouraging, and they offer clear insights into how this valuable technology could be used in real life," said co-author Prof. Ara Darzi, director of the Institute of Global Health Innovation at Imperial College London and chair of Imperial College Health Partners, in a press statement issued by Imperial on 1 January.

"There will be of course a number of challenges to address before AI could be implemented in mammography screening programs around the world, but the potential for improving healthcare and helping patients is enormous," he stated.

'Impressive' study

Despite some limitations, McKinney and colleagues' study is impressive, and its main strength is the large scale of the datasets used for training and subsequently validating the AI algorithm, wrote Dr. Etta Pisano from the American College of Radiology and Beth Israel Lahey Health, Harvard Medical School, Boston, in a linked commentary.

"A system by which AI finds abnormalities that humans miss will require radiologists to adapt to the use of these types of tool," she pointed out. "However, if AI algorithms are to make a bigger difference than [computer-aided detection (CAD)] in detecting cancers that are currently missed, an abnormality detected by the AI system, but not perceived as such by the radiologist, would probably require extra investigation. This might result in a rise in the number of people who receive callbacks for further evaluation."

In addition, it would be essential to develop a mechanism for monitoring the performance of the AI system as it learns from cases it encounters, as occurs in machine-learning algorithms. Such performance metrics would need to be available to those using these tools, in case performance deteriorates over time, Pisano explained.

Dr. László Tabár, professor emeritus of radiology at Uppsala University Faculty of Medicine in Sweden, said he agrees with Pisano about the need for caution.

"The development of AI should be directed toward finding tumors according to their specific imaging biomarkers," he told AuntMinnieEurope.com on 2 January. "These include the more easily recognizable stellate and spherical tumor masses, but, more importantly, the subtle architectural distortion caused by the diffusely infiltrating breast malignancy of mesenchymal origin (also known as diffuse invasive lobular carcinoma), which is highly fatal and often missed at mammography."

Similarly, cancers developing within the major ducts may cause a massive and often fatal tumor burden as a result of new duct formation (neoductgenesis), which is difficult to detect on the mammogram before it calcifies, Tabár added.

Overall, AI has great potential to help solve the complex problem of screening asymptomatic women, but it is necessary to be cautious as well as optimistic about its use in real-life breast cancer screening, he noted. The primary purpose of screening is to find and treat the potentially fatal cancers when they are still in a curable stage.

McKinney and colleagues concede that further research, including prospective clinical studies, is required to understand the full extent to which AI can benefit breast cancer screening programs.