AI-enabled fracture detection software performed with consistent accuracy across three leading European university hospitals in a retrospective study, although it may still require anatomy-specific adjustments, the researchers found.

A team led by Dr. Bastiaan Van Der Zwart of the radiology department at Erasmus Medical Center (EMC) in Rotterdam, the Netherlands, investigated the accuracy and consistency of RBfracture (Radiobotics), a CE-marked program based on a convolutional neural network and designed as a decision-support tool for detecting appendicular fractures in patients older than two years.

The group conducted a retrospective study at three university hospitals: Copenhagen University Hospital Bispebjerg and Frederiksberg (BFH) in Denmark, Charité Universitätsmedizin Berlin (CUB) in Germany, and EMC. The results were presented as a poster at ECR 2025.

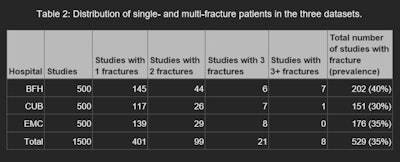

For each hospital, the authors created a dataset of 500 consecutively sampled radiographic exams of patients aged 21 and older with suspected traumatic fractures of the appendicular skeleton and/or pelvis. Each dataset included all available images, clinical referral notes, and radiology reports for every exam, as well as reports of any MRI or CT imaging performed within two weeks of the initial radiographs. The anatomical sites covered were the shoulder, upper arm, forearm, elbow, wrist, hand, fingers, hip, pelvis, upper leg, lower leg, knee, ankle, and foot.

The data were collected consecutively from 30 April 2018 to 30 June 2018 at BFH, from 30 April 2021 to 25 June 2021 at CUB, and from 30 April 2022 to 4 July 2022 at EMC.

To establish the reference standard against which the AI was evaluated, a musculoskeletal radiologist reviewed all of the exams and their corresponding notes and reports. A reference reader at each hospital also reviewed and annotated that hospital's dataset using the same methodology; all reference readers were blinded to the first radiologist's annotations. An experienced radiologist adjudicated any disagreements between the two sets of annotations.
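The two-read-plus-adjudication workflow can be sketched as a small Python function. This is a simplified illustration, not the study's actual tooling: it assumes each read is a set of fracture labels (the hypothetical `Annotation` type), whereas the readers in the study annotated images, and the hypothetical `adjudicate` callback stands in for the experienced radiologist.

```python
from typing import Callable, FrozenSet, Set

# Hypothetical simplification: one read of one exam = a set of fracture labels.
Annotation = FrozenSet[str]


def reference_standard(
    first_read: Annotation,
    second_read: Annotation,
    adjudicate: Callable[[str], bool],
) -> Set[str]:
    """Merge two blinded reads of the same exam into a single reference standard."""
    agreed = set(first_read & second_read)   # marked by both readers: accepted without review
    disputed = first_read ^ second_read      # marked by only one reader: a disagreement
    # The adjudicator decides, finding by finding, whether a disputed fracture is real.
    confirmed = {finding for finding in disputed if adjudicate(finding)}
    return agreed | confirmed
```

Set logic only captures whether a fracture is present; disagreements about a fracture's location or extent would need a richer representation, such as bounding boxes.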

Statistical analysis was performed at both the patient and the fracture level, and performance metrics were calculated separately for each hospital's data. A fracture was considered detected if the AI software's bounding box overlapped the reference-standard annotation. Fracture-wise sensitivity (SEFW) was defined as the proportion of unique fractures correctly identified in at least one projection. Patient-wise specificity (SPEPW) was defined as the proportion of patients without a fracture according to the reference standard in whom the AI software detected no fracture.
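The detection rule and the two metrics in the paragraph above can be expressed in a few lines of Python. This is a minimal sketch under stated assumptions: boxes are hypothetical `(x_min, y_min, x_max, y_max)` tuples, "overlap" is taken to mean any intersection of positive area (the article does not mention an intersection-over-union threshold), and the names `FractureView`, `sensitivity_fracture_wise`, and `specificity_patient_wise` are illustrative, not part of RBfracture.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def boxes_overlap(a: Box, b: Box) -> bool:
    """Assumed matching rule: the boxes intersect with positive area."""
    return min(a[2], b[2]) > max(a[0], b[0]) and min(a[3], b[3]) > max(a[1], b[1])


@dataclass
class FractureView:
    """One reference-standard fracture as it appears on one projection."""
    reference_box: Box
    ai_boxes: List[Box] = field(default_factory=list)  # AI boxes on that same image


def fracture_detected(views: Sequence[FractureView]) -> bool:
    # A unique fracture counts as detected if any AI box overlaps it on at least one projection.
    return any(boxes_overlap(v.reference_box, ai) for v in views for ai in v.ai_boxes)


def sensitivity_fracture_wise(fractures: Sequence[Sequence[FractureView]]) -> float:
    """SEFW: detected unique fractures divided by all reference-standard fractures."""
    return sum(fracture_detected(f) for f in fractures) / len(fractures)


def specificity_patient_wise(ai_flagged: Sequence[bool], has_fracture: Sequence[bool]) -> float:
    """SPEPW: among patients without a reference fracture, the share the AI left unflagged."""
    negatives = [flag for flag, positive in zip(ai_flagged, has_fracture) if not positive]
    return sum(not flag for flag in negatives) / len(negatives)
```

A stricter matching rule, such as requiring an intersection-over-union above some cutoff, would only change `boxes_overlap`; the metric functions stay the same.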

The researchers also calculated the average number of false-positive fractures per patient (FPPPFW). In addition, to assess the tool's value for triage, they calculated a triage-specific patient-wise sensitivity (SEPW-triage), that is, the proportion of fracture-positive patients in whom at least one fracture was detected.
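The false-positive rate and the triage sensitivity could be computed along the following lines. This is a hedged sketch, not the authors' analysis code: it assumes that boxes count as overlapping when they intersect with positive area, treats an AI box that overlaps no reference fracture on the same image as a false positive, and takes the denominator of the triage sensitivity to be patients with at least one reference-standard fracture. All function names are hypothetical.

```python
from typing import Sequence, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def boxes_overlap(a: Box, b: Box) -> bool:
    """Assumed matching rule: the boxes intersect with positive area."""
    return min(a[2], b[2]) > max(a[0], b[0]) and min(a[3], b[3]) > max(a[1], b[1])


def false_positives_on_image(ai_boxes: Sequence[Box], reference_boxes: Sequence[Box]) -> int:
    """Count AI boxes on one image that overlap no reference-standard fracture."""
    return sum(not any(boxes_overlap(ai, ref) for ref in reference_boxes) for ai in ai_boxes)


def mean_false_positives_per_patient(fp_per_patient: Sequence[int]) -> float:
    """FPPPFW: total false positives averaged over every patient in the dataset."""
    return sum(fp_per_patient) / len(fp_per_patient)


def triage_sensitivity(ai_hit: Sequence[bool], has_fracture: Sequence[bool]) -> float:
    """SEPW-triage: share of fracture-positive patients with at least one AI-detected fracture."""
    hits = [hit for hit, positive in zip(ai_hit, has_fracture) if positive]
    return sum(hits) / len(hits)
```

Because the false-positive average divides by all patients, including those without fractures, it reflects how often a reader would see spurious flags across the whole worklist.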

They also performed a subgroup analysis by imaged anatomy, initially grouped as ankle/foot/toe, arm/elbow, wrist/hand/finger, shoulder, hip/pelvis, and leg/knee. The leg/knee, shoulder, and hip/pelvis groups did not reach the prespecified minimum number of fractures at all three centers, so they were excluded from the final analysis.
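The exclusion rule amounts to a simple filter over fracture-positive counts per anatomy group and hospital. The article does not state the minimum used, so `min_positives` below is a hypothetical parameter, as are the function name and the dictionary layout; this is a sketch of the logic, not the study's code.

```python
from typing import Dict, List, Sequence


def eligible_subgroups(
    positives: Dict[str, Dict[str, int]],
    sites: Sequence[str],
    min_positives: int,
) -> List[str]:
    """Return anatomy groups with at least `min_positives` fracture-positive cases at every site.

    `positives` maps group -> {site -> count}; a site missing from the inner dict counts as zero.
    """
    return sorted(
        group
        for group, per_site in positives.items()
        # A group survives only if every hospital contributes enough positives.
        if all(per_site.get(site, 0) >= min_positives for site in sites)
    )
```

Requiring the threshold at every site, rather than in the pooled total, keeps each per-hospital comparison from resting on a handful of cases.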

Source: Dr. Bastiaan Van Der Zwart et al., presented at ECR 2025.

The RBfracture software performed consistently across the three hospitals, with area under the curve (AUC) values of 0.90, 0.91, and 0.95 for BFH, CUB, and EMC, respectively. However, it performed significantly worse at detecting wrist/hand/finger fractures at one hospital, leading the authors to conclude that the tool may require local validation and possibly anatomy-specific adjustments.

Nevertheless, they wrote, the AI-based RBfracture software “shows potential as a triage tool, especially in high-demand environments like emergency departments.”

The full poster is available on the ECR 2025 site. The co-authors were Huibert C. Ruitenbeek, Frederik J. Bruun, Anders Lenskjold, Mikael Boesen, Katharina Ziegeler, Kay-Geert A. Hermann, Edwin Oei, and Jacob Johannes Visser.