The large language model (LLM), GPT-4o, gave a strong showing in translating radiology reports, but its limitations remain significant, a new German analysis has found.

"Translation processing times were highly efficient, with all reports translated in under 25 seconds, demonstrating potential for real-time clinical applications," noted radiologist Dr. Robert Terzis from University Hospital Cologne and colleagues in the European Journal of Radiology (EJR) in an article posted on 28 July. "The most common errors involved untranslated abbreviations and literal translations."

The authors evaluated translations by GPT-4o from German into French, English, Spanish, and Russian. Their aim was to determine the quality of the translations, with emphasis on accuracy, comprehensiveness, and practical feasibility in clinical scenarios.

In a two-center experimental study, the researchers selected a total of 100 anonymized radiology reports (50 from each center) for translation through GPT-4o. Of the 100 reports, there were 20 each from x-ray and ultrasound, and 30 each from MRI and CT.

The team wrote a prompt asking GPT-4o to translate radiology reports from German into English, Spanish, French, or Russian at a C2 proficiency level, maintaining the same structure and language usage as the original report. They then measured the time elapsed between the submission of the prompt and the receipt of the translation from the LLM.
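The timing measurement described above can be sketched roughly as follows. This is a minimal illustration, not the study's code: the prompt wording is paraphrased from the description in this article, the German sample sentence is made up, and `build_prompt`, `timed_translation`, and `fake_translate` are hypothetical names, with `fake_translate` standing in for whatever call actually sends the prompt to the LLM.

```python
import time
from typing import Callable, Tuple

TARGET_LANGUAGES = ("English", "Spanish", "French", "Russian")


def build_prompt(report: str, target_language: str) -> str:
    """Assemble a translation prompt (wording is illustrative, not the study's)."""
    if target_language not in TARGET_LANGUAGES:
        raise ValueError(f"unsupported target language: {target_language}")
    return (
        f"Translate the following radiology report from German into "
        f"{target_language} at a C2 proficiency level, maintaining the same "
        f"structure and language usage as the original report.\n\n{report}"
    )


def timed_translation(
    translate: Callable[[str], str], prompt: str
) -> Tuple[str, float]:
    """Return the translation and the seconds between submission and receipt."""
    start = time.perf_counter()
    translation = translate(prompt)
    elapsed = time.perf_counter() - start
    return translation, elapsed


def fake_translate(prompt: str) -> str:
    """Hypothetical stand-in for the LLM call; a real client would go here."""
    time.sleep(0.01)  # simulate network and model latency
    return "No evidence of pneumothorax."


if __name__ == "__main__":
    prompt = build_prompt("Kein Nachweis eines Pneumothorax.", "English")
    text, seconds = timed_translation(fake_translate, prompt)
    print(f"{seconds:.3f} s: {text}")
```

Passing the translation call in as a function keeps the timing logic independent of any particular LLM client, so the same wrapper could time each of the four target languages.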

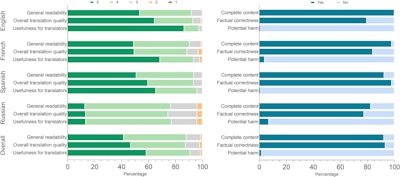

The distribution of Likert scores for ordinal categories and percentage-based nominal values for binary categories, as evaluated by the readers across all languages. Courtesy of Dr. Robert Terzis et al. and the EJR.

For the analysis, the reports were categorized into subspecialties: cardiovascular, neuro, musculoskeletal, thoracic, and abdominal. No significant differences in quality were found among the subspecialties or among the imaging modalities, according to the authors.

Mean processing times for translation did not exceed 25 seconds, ranging from 9 ± 3 seconds for x-ray reports translated into English to 24 ± 9 seconds for CT reports translated into Russian. The mean word count of the original radiology reports was 131 ± 68 words. After translation, the word counts were significantly higher for English, French, and Spanish, but not for Russian (English: 167 ± 82 words, p = 0.016; French: 178 ± 88 words, p < 0.001; Spanish: 186 ± 91 words, p < 0.001; Russian: 149.9 ± 78.8 words, p = 0.455).

Readability scores were high, with an overall median score of 4. Spanish, English, and French were rated high in readability, with median scores of 4.75, 4.5, and 4.5, respectively (interquartile range [IQR], 4-5). Russian fared worse, with a median score of 4 (IQR, 3-4).

In rating the translations for completeness, the readers found 91% (363/400) to be complete. They rated 100% of the English, 98% of the French, and 93% of the Spanish translations as complete. Again, the Russian translations were found wanting in comparison, with only 72% judged to be complete.

Ratings for factual correctness left more room for criticism. The readers rated 79% (316/400) of all translations as factually correct. The highest rates went to English (84%) and French (83%), although the difference from Spanish (79%) was not significant. Only 69% of the Russian translations, however, were rated factually correct.

The readers noted that the most common error type was untranslated abbreviations (i.e., abbreviations were retained as written in German). In some instances, a retained abbreviation was correct in one or more target languages that share the abbreviation with German, but not in the others. The LLM also struggled with language-specific idiomatic phrases, which it translated literally even though a literal rendering did not work in the context of the report.

Finally, the translations were rated for their potential for harm due to inaccuracy. Potential harm was identified in 4% of all translations. Again, the English translations rated best, at 1%, followed by Spanish at 2% and French at 4%. Of the Russian translations, 9% were judged as having potential for harm.

Readers classified some translations as potentially harmful because untranslated abbreviations risked omitting important information. In other cases, the translation changed the meaning enough to alter what was being communicated, with possible clinical implications. One example was a Spanish translation that rendered "partially demarcated infarct" as "partial infarct."

The readers rated both the quality and the usefulness of the translations high overall. The English and Spanish translations scored the highest marks (5 for both languages in both quality and usefulness), followed by French (4.5 and 5, respectively), with Russian, predictably, scoring lowest (4 for both).

The authors attributed the significantly better translations into English and the two Romance languages, compared with Russian, in large part to the relative sizes of the datasets available for training the LLM; Russian’s use of the Cyrillic alphabet may also be a factor in the LLM’s performance.

The German team also cautioned that, regardless of the good performance of GPT-4o (and its speed and cost-effectiveness, which could make it an attractive option), its limitations demonstrate the need for human oversight.
