A widely used artificial intelligence (AI) system trained to interpret chest and musculoskeletal abnormalities failed to pass the U.K. qualifying radiology examinations and was outperformed by human candidates, suggesting AI is not yet ready to replace radiologists, a study posted on 21 December in the BMJ has found.

Researchers chose 300 musculoskeletal and chest x-rays for rapid reporting and compared the performance of a commercially available AI tool with that of 26 radiologists who had passed the Fellowship of the Royal College of Radiologists (FRCR) exam the previous year.

Abnormal adult pelvic radiograph shows increased sclerosis and expansion of the right iliac bone, in keeping with Paget's disease. This was correctly identified by almost all radiologists (96%) but interpreted as normal by the AI candidate (false negative), because the AI had not been trained to identify this pathology. All clinical images courtesy of Dr. Susan Cheng Shelmerdine et al and BMJ.

When uninterpretable images were excluded, the AI candidate achieved an accuracy of 79.5% and passed two of 10 mock FRCR exams, while the average radiologist achieved an accuracy of 84.8% and passed four of 10 mock examinations, noted lead author Dr. Susan Cheng Shelmerdine, PhD, a consultant pediatric radiologist at Great Ormond Street Hospital for Children NHS Foundation Trust in London, and colleagues.

Dr. Susan Cheng Shelmerdine.

The sensitivity (ability to correctly identify patients with a condition) of the AI candidate was 83.6% and its specificity (ability to correctly identify patients without a condition) was 75.2%, compared with 84.1% and 87.3%, respectively, for the radiologists.
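Sensitivity, specificity, and accuracy are all derived from the same four counts of correct and incorrect reads. The minimal Python sketch below shows that arithmetic; the `ConfusionMatrix` class and the counts in the usage example are hypothetical illustrations, not data or code from the study.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Hypothetical tally of reads compared against the reference standard."""
    true_pos: int   # abnormal image, reported as abnormal
    false_neg: int  # abnormal image, reported as normal
    true_neg: int   # normal image, reported as normal
    false_pos: int  # normal image, reported as abnormal

    def sensitivity(self) -> float:
        # Share of abnormal images that were correctly flagged.
        return self.true_pos / (self.true_pos + self.false_neg)

    def specificity(self) -> float:
        # Share of normal images that were correctly cleared.
        return self.true_neg / (self.true_neg + self.false_pos)

    def accuracy(self) -> float:
        # Share of all images that were read correctly.
        total = self.true_pos + self.false_neg + self.true_neg + self.false_pos
        return (self.true_pos + self.true_neg) / total


# Hypothetical counts for illustration only, not study data.
reads = ConfusionMatrix(true_pos=80, false_neg=20, true_neg=90, false_pos=10)
print(f"sensitivity={reads.sensitivity():.1%}")  # sensitivity=80.0%
print(f"specificity={reads.specificity():.1%}")  # specificity=90.0%
print(f"accuracy={reads.accuracy():.1%}")        # accuracy=85.0%
```

Under this sketch, excluding uninterpretable images, as the study did for its headline accuracy figures, simply means leaving those images out of all four counts.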

Of the 148 radiographs (out of 300) that were correctly interpreted by more than 90% of radiologists, the AI candidate was correct in 134 (91%) and incorrect in the remaining 14 (9%). Of the 20 radiographs that more than half of the radiologists interpreted incorrectly, the AI candidate was incorrect in 10 (50%) and correct in the remaining 10.

"We only looked at how well the AI performed on a set of images, without any human synergy or interaction, but what we really want to know in the future is how AI changes patient outcomes and clinical decisions," Shelmerdine told AuntMinnieEurope.com on 22 December.

Speed and accuracy test

The authors developed 10 "mock" rapid reporting exams, based on one of the three modules that make up the qualifying FRCR exam. Each mock exam consisted of 30 radiographs. To pass, candidates had to correctly interpret at least 27 (90%) of the 30 images within 35 minutes.
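The pass rule combines a score threshold with a time limit. The short Python sketch below encodes it under stated assumptions: the function names are hypothetical, "within 35 minutes" is read as 35 minutes or less, and the example scores are invented rather than taken from the study.

```python
EXAM_SIZE = 30       # radiographs per mock exam
PASS_MARK = 27       # correct reads needed (90% of 30)
TIME_LIMIT_MIN = 35  # assumed inclusive limit, in minutes


def passes_mock_exam(correct: int, minutes_taken: float) -> bool:
    """Hypothetical check: True if both the score and time criteria are met."""
    if not 0 <= correct <= EXAM_SIZE:
        raise ValueError("correct must be between 0 and EXAM_SIZE")
    return correct >= PASS_MARK and minutes_taken <= TIME_LIMIT_MIN


def count_passes(results: list[tuple[int, float]]) -> int:
    """Count how many (correct, minutes_taken) attempts meet the pass rule."""
    return sum(passes_mock_exam(c, m) for c, m in results)


# Hypothetical results for three mock exams, not study data.
results = [(28, 30.0), (26, 25.0), (27, 36.0)]
print(count_passes(results))  # 1
```

Only the first attempt passes: the second falls one read short of the mark, and the third reaches the mark but overruns the time limit.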

The AI candidate was Smarturgences v1.17.0, developed by French AI company Milvue and marketed since February 2020. It is used clinically in more than 10 European hospitals and has been trained to assess chest and musculoskeletal (MSK) radiographs for findings such as fractures, swollen and dislocated joints, and collapsed lungs.

Normal pediatric abdominal radiograph interpreted by the AI candidate as showing a right basal pneumothorax (dashed bounding box), a false-positive result. The AI should have classified this image as non-interpretable. Key to French labels: positif = positive; doute = doubt; épanchement pleural = pleural effusion; luxation = dislocation; négatif = negative; nodule pulmonaire = pulmonary nodule; opacité pulmonaire = pulmonary opacification.

Allowances were made for images of body parts that the AI had not been trained on; these images were deemed uninterpretable.

The 26 radiologists were mostly aged between 31 and 40 years, and 62% were women. They slightly overestimated the likely performance of the AI candidate, assuming that it would perform almost as well as they did on average and would outperform them in at least three of the 10 mock exams.

The researchers acknowledged that they evaluated only one AI tool and used mock exams that were not timed or supervised, so radiologists may not have felt as much pressure to do their best as one would in a real exam. But this study is one of the more comprehensive cross-comparisons between radiologists and AI, according to Shelmerdine.

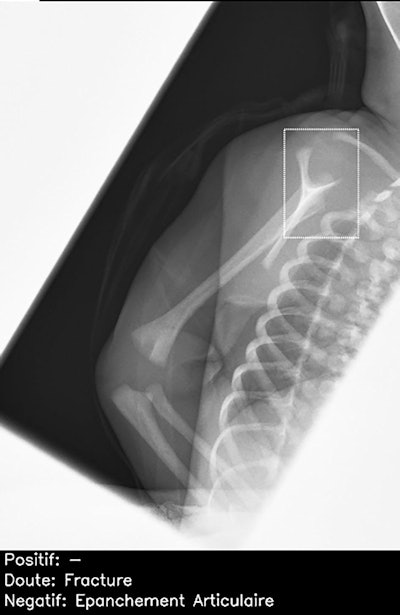

Normal lateral scapular Y view of the right shoulder in a child, incorrectly interpreted by the AI candidate as showing a proximal humeral fracture (dashed bounding box). This false-positive result was correctly identified as normal by all 26 radiologists. Note: épanchement articulaire = joint effusion.

Further training of the AI product is needed, particularly for cases considered "noninterpretable," such as abdominal radiographs and those of the axial skeleton, they said.

Untapped potential of AI

AI may facilitate workflows, but human input is still crucial, noted Vanessa Rampton, senior researcher with the chair of philosophy at ETH Zurich, and Athena Ko, resident physician at the University of Ottawa, Canada, in a linked editorial entitled "Robots, radiologists, and results."

They acknowledge that using AI "has untapped potential to further facilitate efficiency and diagnostic accuracy to meet an array of healthcare demands," but say doing so appropriately "implies educating physicians and the public better about the limitations of artificial intelligence and making these more transparent."

Research on this subject is buzzing, and this study highlights that passing the FRCR examination still benefits from the human touch, they noted.

Looking to the future

Shelmerdine and her colleagues are now collaborating with academic and commercial partners in pediatric imaging, including pediatricians, emergency doctors, nurses, radiographers, and radiologists.

"We will be finding out whether clinical decisions made without AI versus after AI opinion changes and how this might affect patients when AI becomes more widespread," she said.

Milvue is improving the training of its AI model and hopes that an updated version of the product will be able to interpret spine radiographs; at present, the tool cannot interpret spine, dental, facial, or abdominal radiographs.

"We hope to retest the new AI model again in the future and see whether it can improve in test scores and pass more mock examinations!" said Shelmerdine, who is principal investigator of the Fracture Study, a U.K. project funded by the National Institute for Health and Care Research that is examining how AI can be used to interpret pediatric imaging.

"We are developing AI algorithms to determine how we can better assess pediatric fractures on radiographs and also the impact this has on human decision-making in clinical practice," she said.