As access to digital medical data becomes more widespread and artificial intelligence (AI) algorithms continue to develop, safeguards such as anonymization, pseudonymization (as promoted by the European Society of Radiology), and encryption are essential to ensure patient safety, according to informatics expert Dr. Erik Ranschaert, PhD.

"Personal data that is fully anonymized is exempt from the requirements of the GDPR (the European Union [EU] General Data Protection Regulation) as the data is no longer identifiable to the individual and therefore carries no risk to their rights and freedoms. Properly anonymized data can in theory be disclosed without breaching GDPR. No permission from individuals is needed," he told attendees at the five-day National Imaging Informatics Course-Radiology (NIIC RAD), hosted online from 27 September to 1 October by the Society for Imaging Informatics in Medicine (SIIM).

Both the U.S. Health Insurance Portability and Accountability Act (HIPAA) of 1996 and the GDPR are clear about the safeguards required for patient data used in research. One key safeguard is that retrospectively and prospectively gathered data must be deidentified. In Europe, the GDPR provides for technical and organizational measures to facilitate the use of data in research, public health, biobanks, and big data analytics.

Ranschaert, immediate past president of the European Society of Medical Imaging Informatics (EuSoMII) and visiting professor at Ghent University in Belgium, spoke about the protection of patient data in the EU versus the U.S. and outlined the requirements of HIPAA and of the GDPR, which took effect in 2018. The GDPR is the toughest data privacy and security law in the world, he said. He also pointed to areas of data protection that could be improved.

Conflicting objectives in the GDPR, such as ensuring privacy rights for personal data while providing adequate access to those data for research and public health purposes, have necessitated several derogations regarding patient data. EU member states may apply for specific exemptions but must still meet all GDPR requirements, Ranschaert noted.

Medical research

When radiology data are posted for open-source research, the DICOM metadata can be removed completely, or the images can be converted to another format, such as that of the Neuroimaging Informatics Technology Initiative (NIfTI), which retains only voxel size and patient position. The disadvantage is that the data lose information that could be valuable for research or for developing bias-free algorithms, because the properties of the dataset become difficult to evaluate. Removing metadata is not sufficient on its own, however: with volumetric acquisition and 3D reformatting, images reconstructed with soft-tissue kernels allow the face to be reconstructed. Ranschaert pointed to a Mayo Clinic study in which standard facial recognition software could identify research volunteers from their MRI reconstructions in 85% of cases.
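The metadata-removal option described above, keeping only voxel size and patient position, can be illustrated with a minimal Python sketch. It assumes a DICOM header has already been read into a plain dictionary keyed by standard DICOM keywords (reading the file is not shown); the function name `strip_to_geometry` and the exact set of keywords treated as "geometry" are illustrative assumptions, not the NIfTI specification or Ranschaert's method.

```python
from typing import Any, Dict

# Assumed selection of DICOM keywords that describe voxel geometry and
# patient position, roughly the information a NIfTI header keeps.
GEOMETRY_KEYWORDS = frozenset({
    "PixelSpacing",             # in-plane voxel size (mm)
    "SliceThickness",           # through-plane voxel size (mm)
    "ImagePositionPatient",     # position of the first voxel
    "ImageOrientationPatient",  # row/column direction cosines
})


def strip_to_geometry(header: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a DICOM-style header keeping only geometry fields.

    Everything else (names, IDs, dates, scanner and protocol details) is
    dropped, which also discards information that may be useful for research.
    """
    return {k: v for k, v in header.items() if k in GEOMETRY_KEYWORDS}


if __name__ == "__main__":
    header = {
        "PatientName": "DOE^JANE",
        "PatientID": "123456",
        "StudyDate": "20210927",
        "Manufacturer": "ExampleVendor",
        "PixelSpacing": [0.5, 0.5],
        "SliceThickness": 1.0,
        "ImagePositionPatient": [-120.0, -120.0, 40.0],
        "ImageOrientationPatient": [1, 0, 0, 0, 1, 0],
    }
    print(sorted(strip_to_geometry(header)))
    # ['ImageOrientationPatient', 'ImagePositionPatient', 'PixelSpacing', 'SliceThickness']
```

Note that this only touches the header: the pixel data still contain the patient's anatomy, so the facial reconstruction risk described above is not addressed by metadata removal.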

Full anonymization is often difficult to achieve, and for research it is often not desirable. For example, skull-stripping removes identifiable facial features from the images, but it can reduce the generalizability of AI models trained on such data, according to Ranschaert.

"Absolutely reliable protection of individual digital biometric data, specifically imaging data, is almost impossible. For example, in an MRI of the head, anonymization would require removal of all written information from the file including DICOM metadata and use of software to irreversibly scramble soft tissue structures of the individual's face," he told course attendees.

In most cases data can only be partially anonymized, or pseudonymized, and therefore will still be subject to data protection legislation.

"Privacy remains of paramount importance, and we need to continue to develop new mechanisms for protecting it, in terms of defining the purpose of the data, adhering to existing ethical standards and using the right safeguards such as pseudonymization," he said. "Furthermore, existing exemptions [for research purposes] should never result in patient data being processed for other purposes by third parties, e.g., employers, insurance, banking companies, commercial enterprises."
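Pseudonymization, the safeguard Ranschaert names here, can be done in several ways. The minimal Python sketch below shows one common approach, keyed hashing (HMAC-SHA256) of the patient identifier, chosen here as an illustrative assumption rather than a method prescribed by the European Society of Radiology or the GDPR. The `Pseudonymizer` class is hypothetical. Because the key holder can still map pseudonyms back to patients, data processed this way remain personal data.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Optional


class Pseudonymizer:
    """Hypothetical keyed pseudonymizer (illustrative, not a standard API).

    Under one secret key, the same patient ID always yields the same
    pseudonym, so records from different studies can still be linked.
    Only the holder of the key or the lookup table can re-identify patients.
    """

    def __init__(self, key: bytes) -> None:
        self._key = key
        # Re-identification table; in practice kept by the data custodian,
        # separate from the shared research dataset.
        self._lookup: Dict[str, str] = {}

    def pseudonymize(self, patient_id: str) -> str:
        # HMAC-SHA256 of the identifier: without the key, the pseudonym
        # cannot be recomputed from a guessed patient ID.
        pseudonym = hmac.new(
            self._key, patient_id.encode("utf-8"), hashlib.sha256
        ).hexdigest()
        self._lookup[pseudonym] = patient_id
        return pseudonym

    def reidentify(self, pseudonym: str) -> Optional[str]:
        # Returns None for pseudonyms this instance never issued.
        return self._lookup.get(pseudonym)


if __name__ == "__main__":
    p = Pseudonymizer(secrets.token_bytes(32))
    alias = p.pseudonymize("123456")
    print(alias == p.pseudonymize("123456"))  # True: stable pseudonym
    print(p.reidentify(alias))                # 123456
```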

Data breaches

Data breaches occur frequently and are caused not only by hackers but also by incorrect administrative procedures, accidental disclosure, lost devices, and improper disposal of data, Ranschaert explained.

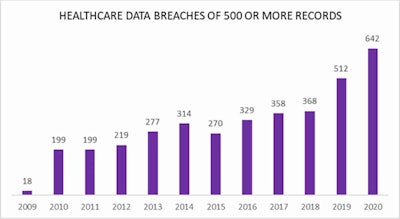

Number of data breaches reported to the U.S. Office for Civil Rights. Courtesy of HIPAA Journal.

He pointed to a HIPAA Journal report revealing that between 2009 and 2020, a total of 3,705 healthcare data breaches of 500 or more records were reported to the Office for Civil Rights of the U.S. Department of Health and Human Services (HHS).

Those breaches resulted in the loss, theft, exposure, or impermissible disclosure of 268,189,693 healthcare records, equivalent to more than 81.72% of the U.S. population. In 2018, healthcare data breaches of 500 or more records were reported at a rate of around one per day; by 2020, that rate had risen to an average of 1.76 breaches per day.

Under HIPAA, patients must be notified without unreasonable delay, and if the breach affects 500 or more records, the HHS secretary must be notified within 60 days. Under the GDPR, a breach must be communicated to the affected individual if it poses a high risk to that person's rights and freedoms, and the hospital's data protection officer (DPO) must notify the supervisory authority within 72 hours, according to Ranschaert.
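To make the difference in timelines concrete, here is a minimal Python sketch that computes the latest notification time under each rule as summarized above. The function names are hypothetical, the 60-day and 72-hour windows are the figures quoted in the talk, and the sketch assumes the clock starts when the breach is discovered (HIPAA) or when the organization becomes aware of it (GDPR). It is a simplification that models only these two deadlines.

```python
from datetime import datetime, timedelta
from typing import Optional

# Windows as summarized in the talk (simplified; other duties, such as
# notifying individuals, are not modeled).
HIPAA_HHS_WINDOW = timedelta(days=60)
GDPR_AUTHORITY_WINDOW = timedelta(hours=72)


def hipaa_hhs_deadline(discovered: datetime, records_affected: int) -> Optional[datetime]:
    """Latest time to notify the HHS secretary for a breach of >= 500 records."""
    if records_affected >= 500:
        return discovered + HIPAA_HHS_WINDOW
    return None  # smaller breaches are reported differently (not modeled here)


def gdpr_authority_deadline(became_aware: datetime) -> datetime:
    """Latest time to notify the supervisory authority under the GDPR."""
    return became_aware + GDPR_AUTHORITY_WINDOW


if __name__ == "__main__":
    t = datetime(2021, 9, 27, 9, 0)
    print(gdpr_authority_deadline(t))   # 2021-09-30 09:00:00
    print(hipaa_hhs_deadline(t, 1200))  # 2021-11-26 09:00:00
```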

In the most serious cases, HIPAA fines can reach $50,000 (43,000 euros) per violation, up to a maximum of $1.5 million (1.3 million euros) per year, while GDPR fines are higher: up to 20 million euros or 4% of the institution's global annual turnover, whichever is higher, he continued.
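The two penalty ceilings quoted above combine differently: HIPAA multiplies a per-violation amount and then caps it, while the GDPR takes the larger of a fixed sum and a share of turnover. The minimal Python sketch below computes those upper bounds using only the figures from the talk; the function names are hypothetical, and actual penalties are set case by case and can be well below these limits.

```python
def hipaa_max_annual_fine(violations: int,
                          per_violation_usd: int = 50_000,
                          annual_cap_usd: int = 1_500_000) -> int:
    """Upper bound from the quoted figures: per-violation fine, capped per year."""
    return min(violations * per_violation_usd, annual_cap_usd)


def gdpr_max_fine(global_annual_turnover_eur: int) -> int:
    """Top GDPR tier: 20 million euros or 4% of turnover, whichever is higher."""
    # Integer arithmetic keeps the 4% calculation exact.
    return max(20_000_000, global_annual_turnover_eur * 4 // 100)


if __name__ == "__main__":
    print(hipaa_max_annual_fine(10))     # 500000
    print(hipaa_max_annual_fine(40))     # 1500000 (cap reached)
    print(gdpr_max_fine(100_000_000))    # 20000000 (4% would be only 4 million)
    print(gdpr_max_fine(1_000_000_000))  # 40000000
```

For a large institution the turnover-based term dominates, which is why GDPR exposure can far exceed the fixed 20 million euro figure.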

U.S.-European variations

Course participants heard that in the EU, patients have the right to access their medical data, and the data should also be transferable. In reality, however, patients' ability to access their electronic health records varies greatly from one country to another. Although some citizens can access part of their electronic health records at the national level or across borders, many others have limited digital access or none at all.

For this reason, the European Commission has issued recommendations to facilitate cross-border access that is secure and in full compliance with the GDPR.

In the U.S., with the exception of New Hampshire, the medical record is owned by the performing entity (i.e., the hospital or physician).

A number of important differences also exist between data protection legislation in Europe and the U.S., and these are listed in the table below.

| | HIPAA | GDPR |
|---|---|---|
| Governance | Health data only | All personal data |
| Consent | No consent is required from patients for the release of data to third parties such as insurance companies. | Explicit consent is required for any use of protected health information (PHI) other than direct patient care. |
| Privacy | All patients have the right to a copy of their health data, but not free of charge. | All patients have the right to a free copy of their health data (portability) and a limited right to rectify and erase data. |
| Security | Security measures are required to ensure confidentiality. Breach notification within 60 days. | Security measures are required to ensure confidentiality. Breach notification within 72 hours. |
| Penalties | Fines of up to $1.5 million (1.3 million euros) per year. | Fines of up to 20 million euros or 4% of global annual revenue, whichever is higher. |

Anticorruption frameworks

In response to a question from an attendee about the controversy surrounding the deal between Memorial Sloan Kettering and Paige.AI, which has raised the issue of physicians allegedly profiting from archived pathology material and associated data, Ranschaert noted that hospitals were developing policies in this area and that awareness was growing. He also discussed how hospitals and companies might avoid ethical dilemmas when using archived data in potentially for-profit ventures.

"As hospitals develop more AI tools, they are actively thinking about what policies to establish, but not all centers as yet have a policy on the using and sharing of data for the development of algorithms," he said. "There is a difference between using data for research or in-house developments, and third parties and external parties using it, so it's essential that if you are a hospital confronted with a request from an external party that you do have a policy."

He added that the policy should be part of a framework for adequate data management that includes a data protection officer. In addition, the ethics committee should always be consulted when medical data are used for research or other purposes.

"The main message is that hospitals have to expand their policies and procedures and include the element of data availability for AI development," noted Ranschaert.