The COVID-19 crisis has highlighted the limits of the European Union (EU) General Data Protection Regulation (GDPR) and shown that the EU is not ready to make full use of artificial intelligence, according to informatics expert Dr. Erik Ranschaert, PhD.

"GDPR has strict data protection rules that limit the collection, use, and exchange of data, and thus its ability to jointly tackle the spread of the disease," said Ranschaert, speaking at the AuntMinnie.com 2020 Virtual Conference on 30 April. "Without an EU-led initiative, national initiatives will remain fragmented, making it unlikely that innovative technologies can be fully exploited, particularly in a crisis, when urgent action is a prerequisite and most beneficial."

GDPR still contains too many ambiguities, which prompts many people to adopt an overprotective stance, he added.

Beyond GDPR, other issues in Europe still need to be resolved: the backlog in artificial intelligence (AI) development, the limited availability of funding for AI research, and the absence of a technology-adapted legal framework for data exchange, of a solid data-sharing infrastructure, and of a common, broad-based insight and vision, noted Ranschaert, who is president of the European Society of Medical Imaging Informatics (EuSoMII).

To overcome these challenges, hospitals must facilitate data exchange for research purposes and development of new technologies, invest in digital infrastructure to enable smooth and accurate development and integration of AI tools, and collaborate on a pan-European level to provide information to open data repositories for research and education. They also need to look for properly tested AI software that has regulatory approval and continuously evaluate outcomes, he said.

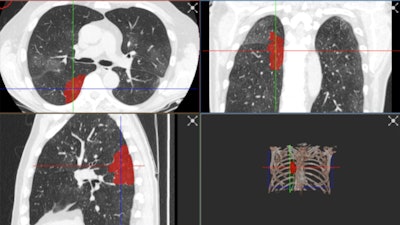

The best AI platforms have a wide range of labeling and segmentation options so that images can be presented correctly. With the RVAI platform from Robovision, the margins of the opacities in the lung are automatically identified by the "adaptive" brush tool. The lesions or abnormalities are simultaneously segmented in three different planes. In this figure, not all visible opacities have been segmented yet. Images courtesy of Dr. Erik Ranschaert, PhD.

An added difficulty with COVID-19 lung abnormalities is that they can be subtle and hard to differentiate from other conditions. Last week, for example, Ranschaert and his colleagues at the Elisabeth-TweeSteden Hospital in Tilburg, the Netherlands, treated a patient with Pneumocystis carinii pneumonia. This infection can produce opacification in both lungs similar to that seen in COVID-19, so it requires great care and can be misdiagnosed, he pointed out.

"In this phase of the crisis, we see typical abnormalities and everybody assumes straight away that this is COVID-19, but we have to keep in mind this is not always the case," he said. "When developing an AI tool, we have to have a good, balanced data set. This means there must be a controlled data set that shows other, similar kinds of pathologies so the AI tool can provide a good probability score that this is COVID-19 or not."

To see how accurate AI is in everyday clinical conditions, it's essential to verify the tool and continuously test the outcomes, Ranschaert emphasized.

Real-world data collection

Another practical difficulty is the need to be fairly selective about data collection.

"We are facing time constraints here," he explained. "We want to develop something that's useful during the crisis. If we start collecting a lot of data, it will take a lot more time to assemble this with the images, but we do need some basic clinical information and connect these to the images. We also need to overcome all administrative and contractual issues with hospitals before we can start receiving data. These are considerable obstacles, especially in times when it is necessary to work fast."

For practical reasons and speed, Ranschaert favors pseudonymization over full anonymization. With pseudonymization, each patient is given a code that links to the images, an approach that is still compliant with GDPR.
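As a rough illustration of the idea of giving each patient a code that links to the images, the Python sketch below assigns a random study code per patient and keeps the lookup table on the side of the data holder. The class name, field names, and code format are hypothetical and chosen only for this example; it is not the workflow Ranschaert's team used, and it does not by itself establish GDPR compliance.

```python
import secrets


class Pseudonymizer:
    """Hypothetical helper: maps each patient ID to a random study code.

    The lookup table is assumed to stay with the hospital and is never
    shared together with the research records.
    """

    def __init__(self):
        self._code_by_patient = {}
        self._used_codes = set()

    def code_for(self, patient_id: str) -> str:
        # Reuse the same code for the same patient so that all of their
        # images stay linked in the research data set.
        if patient_id not in self._code_by_patient:
            code = "P-" + secrets.token_hex(4)
            while code in self._used_codes:  # avoid an unlikely collision
                code = "P-" + secrets.token_hex(4)
            self._used_codes.add(code)
            self._code_by_patient[patient_id] = code
        return self._code_by_patient[patient_id]


def pseudonymize(record: dict, pseudonymizer: Pseudonymizer) -> dict:
    # Keep only the fields needed for research and replace the direct
    # identifier with the study code. Field names are assumptions.
    return {
        "patient_code": pseudonymizer.code_for(record["patient_id"]),
        "image_file": record["image_file"],
        "diagnosis": record["diagnosis"],
    }


if __name__ == "__main__":
    p = Pseudonymizer()
    scan = {
        "patient_id": "NL-000123",
        "name": "Example Patient",
        "image_file": "chest_ct_01.dcm",
        "diagnosis": "COVID-19",
    }
    print(pseudonymize(scan, p))
```

Because the code is random rather than derived from the patient ID, someone holding only the research records cannot recompute the link; re-identification requires the lookup table.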

Sharing of patient data can also be tricky in some countries. In Italy, for example, transferring patient images outside of the hospital is not allowed. When a research project is clearly defined, noncommercial, and has ethical approval, sharing is generally less problematic because GDPR permits some flexibility on data sharing in such cases, he said.

"If we want innovation like AI tools to flourish and help us in a crisis, then adapting the legislation is needed," Ranschaert commented. "There is an urgent need for unambiguous guidelines in order to apply the principles of the GDPR within innovation and research."

For training an algorithm, he thinks chest CT images with a slice thickness of 3 mm or less provide sufficient image quality.
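A slice-thickness criterion like this one could be applied as a simple filter when assembling a training set. The sketch below is only an illustration: the `slice_thickness_mm` field and the series records are hypothetical, and in practice the value would typically be read from the DICOM Slice Thickness attribute (0018,0050).

```python
MAX_SLICE_THICKNESS_MM = 3.0  # threshold taken from the text: 3 mm or less


def usable_for_training(series: dict) -> bool:
    # Series with a missing thickness value are excluded rather than guessed.
    thickness = series.get("slice_thickness_mm")
    return thickness is not None and thickness <= MAX_SLICE_THICKNESS_MM


def select_series(series_list: list) -> list:
    # Keep only the chest CT series thin enough for training.
    return [s for s in series_list if usable_for_training(s)]


if __name__ == "__main__":
    candidates = [
        {"series_id": "S1", "slice_thickness_mm": 1.0},
        {"series_id": "S2", "slice_thickness_mm": 3.0},
        {"series_id": "S3", "slice_thickness_mm": 5.0},
        {"series_id": "S4"},  # thickness not recorded
    ]
    for s in select_series(candidates):
        print(s["series_id"])
```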