Artificial intelligence (AI) algorithms can be used to thwart cyberattacks on medical imaging equipment, but they may also be used to alter images, making attacks very difficult for radiologists to detect, according to research presented at RSNA 2018 in Chicago.

Investigators from Israel described how their AI model can alert users when a CT scanner receives a malicious command during a cyberattack, while researchers from Switzerland showed how their algorithm could insert or remove cancer-specific features on mammograms.

In their presentation, researchers from Ben-Gurion University of the Negev in Beersheba, Israel, discussed areas of vulnerability and ways to increase security on CT equipment. They also showed how hackers might bypass CT system security mechanisms to manipulate scanner behavior, such as changing radiation dose. To detect anomalies and prevent cyberattacks, the researchers used various machine-learning and deep-learning methods to train an algorithm with actual commands recorded from real devices.

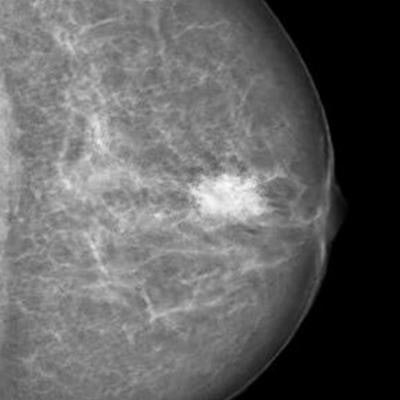

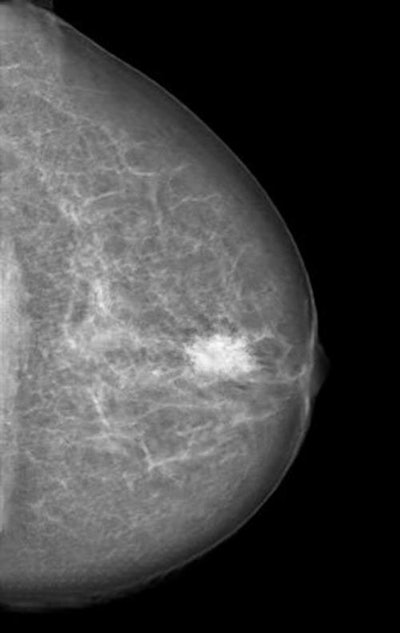

Mammogram manipulated with a neural network-generated mass. Image courtesy of RSNA and Dr. Anton Becker.

The model then learned how to recognize normal commands and to predict if new commands are legitimate or not, according to the researchers. After the system detects a malicious command sent to the device by an attacker, it alerts the operator before the command is executed.
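The general idea of learning what normal commands look like and holding back anything that deviates can be illustrated with a deliberately simplified sketch. This is not the Ben-Gurion team's model, which relied on machine-learning and deep-learning methods; it swaps in a plain range check per parameter. The command names, parameters, and function names below are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CommandProfile:
    """Lowest and highest value seen for each parameter of one command type."""
    low: dict = field(default_factory=dict)
    high: dict = field(default_factory=dict)


def learn_profiles(normal_commands):
    """Build per-command parameter ranges from commands assumed to be benign."""
    profiles = {}
    for name, params in normal_commands:
        profile = profiles.setdefault(name, CommandProfile())
        for key, value in params.items():
            profile.low[key] = min(profile.low.get(key, value), value)
            profile.high[key] = max(profile.high.get(key, value), value)
    return profiles


def find_anomalies(profiles, name, params, margin=0.1):
    """Return the reasons a command looks abnormal (empty list if none)."""
    if name not in profiles:
        return [f"unknown command {name!r}"]
    profile = profiles[name]
    reasons = []
    for key, value in params.items():
        if key not in profile.low:
            reasons.append(f"unknown parameter {key!r}")
            continue
        # Allow a small tolerance beyond the range seen during training.
        slack = margin * (profile.high[key] - profile.low[key])
        if not (profile.low[key] - slack <= value <= profile.high[key] + slack):
            reasons.append(f"{key}={value} outside learned range")
    return reasons


def guarded_execute(profiles, name, params, execute, alert):
    """Alert the operator instead of executing when a command looks abnormal."""
    reasons = find_anomalies(profiles, name, params)
    if reasons:
        alert(name, reasons)
        return False
    execute(name, params)
    return True


# Hypothetical recorded commands standing in for real device logs.
recorded = [
    ("set_tube_current", {"mA": 200}),
    ("set_tube_current", {"mA": 350}),
    ("start_scan", {"slices": 64}),
]
profiles = learn_profiles(recorded)
```

In this sketch, `guarded_execute` sits between the sender and the device, so a suspicious command triggers `alert` and is never passed to `execute`. A real detector would need far richer features, such as command sequences and timing, than a per-parameter range.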

"In cybersecurity, it is best to take the 'onion' model of protection and build the protection in layers," said presenter Tom Mahler, PhD, in a statement from the RSNA. "Previous efforts in this area have focused on securing the hospital network. Our solution is device-oriented, and our goal is to be the last line of defense for medical imaging devices."

Mahler noted that there's no indication that these types of cyberattacks have actually occurred.

"If healthcare manufacturers and hospitals will take a proactive approach, we could prevent such attacks from happening in the first place," he said.

Meanwhile, researchers from University Hospital Zurich and ETH Zurich in Switzerland described how they trained a cycle-consistent generative adversarial network (CycleGAN) to convert mammography images showing cancer into normal studies, as well as to convert normal control images into studies that show cancer. After three radiologists reviewed all of the images, none could reliably distinguish between the genuine and the modified cases.

"As doctors, it is our moral duty to first protect our patients from harm," said presenter Dr. Anton Becker in a statement. "For example, as radiologists we are used to protecting patients from unnecessary radiation. When neural networks or other algorithms inevitably find their way into our clinical routine, we will need to learn how to protect our patients from any unwanted side effects of those as well."

Attacks that could remove cancerous lesions from the image and replace them with normal-looking tissue won't be feasible for at least five years, Becker noted. He urged the medical community and hardware and software vendors to address the issue while it's still a theoretical problem.