VIENNA -- Mammographic image quality affects the performance of AI more than that of radiologists, according to research presented on 27 February at ECR 2025.

In her talk, Sarah Verboom from Radboud University Medical Center in Nijmegen, the Netherlands, discussed her team’s findings, which showed that AI performance in interpreting mammograms declined with image quality even when the performance of radiologists was unaffected.

Sarah Verboom presents her team's research at ECR 2025, showing that mammographic image quality affects the performance of AI more than that of radiologists.

“We saw that AI [systems]…trend downward when the image quality goes down,” Verboom told AuntMinnieEurope.com. “So, they have more quality issues than radiologists have.”

While imaging AI proponents say the technology is on par with radiologists in interpreting mammograms, AI can be confused by things that do not normally confuse humans.

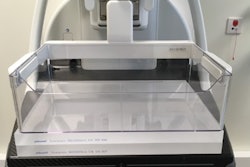

Verboom and colleagues studied how common image quality issues in mammograms change the performance of AI-based mammography interpretation systems compared with expert breast radiologists.

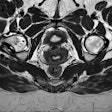

They simulated five common image quality issues on 80 digital screening mammograms: spatial resolution, quantum noise, high contrast, low contrast, and correlated noise. Of the 80 mammograms, 40 were malignant, 20 were benign, and 20 were normal. The team simulated each issue at three levels: a reference-level quality, the lowest quality that was acceptable to radiologists, and a realistic quality that was not acceptable.

The study also included 13 expert breast radiologists from five countries and two commercial AI systems. Each radiologist and AI system assessed the mammograms and scored them with a probability of malignancy and a recall decision. The researchers derived the AI recall decisions by matching each system's specificity on standard-quality images to that of the radiologists.
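
One way to picture the specificity matching described above is as a threshold search on the AI malignancy scores. The following is a minimal sketch under stated assumptions, not the researchers' method: the function names, the scores, and the 0.8 specificity target are all hypothetical, and a case is assumed to be recalled when its score is at or above the threshold.

```python
import math


def threshold_for_specificity(non_malignant_scores, target_specificity):
    """Return the lowest candidate threshold whose specificity meets the target.

    Assumption: a case is recalled when score >= threshold, so specificity is
    the fraction of non-malignant cases scoring strictly below the threshold.
    """
    if not non_malignant_scores:
        raise ValueError("need at least one non-malignant case")
    n = len(non_malignant_scores)
    # Candidate thresholds: every observed score, plus infinity (recall nothing).
    candidates = sorted(set(non_malignant_scores)) + [math.inf]
    for threshold in candidates:
        not_recalled = sum(score < threshold for score in non_malignant_scores)
        if not_recalled / n >= target_specificity:
            return threshold
    return math.inf  # only reached if the target exceeds 1.0


def recall_decisions(scores, threshold):
    """Turn malignancy scores into recall decisions (True means recall)."""
    return [score >= threshold for score in scores]


# Hypothetical AI scores on non-malignant reference-quality images.
ai_scores_non_malignant = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
# Hypothetical radiologist specificity on the same images.
radiologist_specificity = 0.8

threshold = threshold_for_specificity(ai_scores_non_malignant, radiologist_specificity)
decisions = recall_decisions([85, 95], threshold)
```

Fixing the threshold on reference-quality images and then reusing it on degraded images is what lets recall decisions be compared across quality levels in a sketch like this.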

While the radiologists as a group maintained steady performance across all image qualities, both AI systems showed decreases in area under the curve (AUC) values.

Comparison between AI systems, radiologists by image quality (AUC)

| Image quality | Radiologists | AI system A | AI system B |
|---|---|---|---|
| Reference images | 0.76 | 0.72 | 0.95 |
| Acceptable-quality images | 0.76 | 0.68 | 0.91 |
| Unacceptable-quality images | 0.76 | 0.61 | 0.87 |
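
For readers unfamiliar with the metric, AUC can be read as the probability that a randomly chosen malignant case receives a higher malignancy score than a randomly chosen non-malignant case. The sketch below computes it by direct pairwise comparison. It is an illustration only: the function name and scores are made up, and the article does not describe how the study computed its AUC values.

```python
def auc(malignant_scores, non_malignant_scores):
    """Pairwise (Mann-Whitney) estimate of the area under the ROC curve.

    Each malignant/non-malignant pair counts 1 if the malignant case scores
    higher, 0.5 if the scores tie, and 0 otherwise.
    """
    if not malignant_scores or not non_malignant_scores:
        raise ValueError("need at least one case in each group")
    wins = 0.0
    for m in malignant_scores:
        for n in non_malignant_scores:
            if m > n:
                wins += 1.0
            elif m == n:
                wins += 0.5
    return wins / (len(malignant_scores) * len(non_malignant_scores))


# Hypothetical scores: three of the four pairs are ranked correctly.
example_auc = auc([0.8, 0.4], [0.5, 0.2])
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of the two groups.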

Radiologists gave the same recall decision as on the reference images in 83% of acceptable-quality images and 82% of unacceptable-quality images. However, system A gave the same recall decision in 75% of acceptable cases (p = 0.06) and 68% of unacceptable cases (p = 0.001). System B, meanwhile, did the same in 80% of acceptable cases (p = 0.47) and 78% of unacceptable cases (p = 0.27).
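
The agreement percentages above can be pictured as a simple match rate between two sets of recall decisions for the same cases. The sketch below assumes that agreement is measured against the decision made on the reference-quality version of each image. The function name and the decisions are hypothetical, and the statistical testing behind the reported p-values is not reproduced here.

```python
def recall_agreement(reference_decisions, degraded_decisions):
    """Fraction of cases whose recall decision is unchanged on the degraded image."""
    if len(reference_decisions) != len(degraded_decisions):
        raise ValueError("both lists must cover the same cases")
    if not reference_decisions:
        raise ValueError("need at least one case")
    matches = sum(
        ref == deg for ref, deg in zip(reference_decisions, degraded_decisions)
    )
    return matches / len(reference_decisions)


# Hypothetical decisions for four cases (True means recall).
reference = [True, False, True, False]
degraded = [True, False, False, False]
agreement = recall_agreement(reference, degraded)
```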

Verboom said that the team plans to test this hypothesis on a larger dataset to see how AI systems behave on a bigger scale.

“[We also want] to see how it [AI] behaves there and see the differences in work quality issues and also see we can have the models be aware of that so they can flag when they’re not capable of handling these different qualities,” she told AuntMinnieEurope.com.