With its second AI Runner project, a group from the Research and Practical Clinical Center of Diagnostics and Telemedicine Technologies in Moscow is edging closer to finding artificial intelligence (AI) solutions for cancer detection that really work.

Dr. Victor Gombolevskiy, PhD, part of the AI Runner project.

The researchers took a data sample from the Moscow Lung Cancer Screening Trial and approached all the companies at ECR 2019 claiming to have a market-ready neural network for lung nodule detection in chest CT. The goal was to compare their solutions with real-life cases.

"AI in radiology is a hot -- and possibly overhyped -- topic. Each company promises us a Holy Grail, but how much of it is true?" team member Dr. Victor Gombolevskiy, PhD, told AuntMinnieEurope.com. "Because training and validation bias may affect diagnostic accuracy, we wanted to test various solutions with the same dataset for a real, down-to-earth comparison between them."

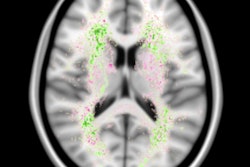

The sample consisted of 100 anonymized ultralow-dose chest CT studies. The team believes that lung cancer screening with an effective dose of less than 1 mSv could decrease long-term radiation risks. The dataset included verified lung cancer cases as well as benign nodules that had been followed up for 600 days, indicating a low likelihood of cancer.

The researchers conducted a similar experiment at ECR 2018 on four cases of pulmonary nodules. They found that AI-based solutions worked with ultralow-dose chest CT studies and identified nodules in patients weighing up to 125 kg. The goal was automated detection of nodules larger than 4 mm, without false-positive findings, in the following four cases:

- A scan without iterative reconstruction with lung nodules

- A scan without iterative reconstruction with severe emphysema and lung nodules

- A scan with iterative reconstruction, severe emphysema, and lung nodules

- A scan without iterative reconstruction in an overweight patient (125 kg) with a single nodule

For this second project, however, it was crucial to test a larger dataset to evaluate AI-based solutions in the detection of both benign and malignant pulmonary nodules with various morphologies, according to Gombolevskiy.

The research team was larger this time and also included the center's CEO, Dr. Sergey Morozov, PhD, who recently co-edited the book Artificial Intelligence in Medical Imaging (with Drs. Erik Ranschaert, PhD, and Paul Algra). Also bolstering the team were Dr. Ivan Blokhin and Dr. Valeria Chernina. The researchers brought three hard drives so they could test more developers, expecting that some would have exaggerated their solutions' capabilities while others would be unaware of what their products could achieve.

Searching for AI's Holy Grail: solutions that work. The Russian team, pictured here, tested 12 vendor solutions at ECR 2019 and plans to run a similar project at RSNA 2019.

A total of 12 companies responded to the Russian challenge at ECR 2019. Six of them agreed to test their solutions with the Moscow dataset, and although the data are still being processed, at least two companies showed promise, according to the researchers.

"Compared to last year's AI Runner (ECR 2018), several solutions did not perform very well due to false-negative or false-positive results," Gombolevskiy noted. "We believe that the results suggest a bias of training datasets. We will follow up with statistical analysis."

In the second AI Runner, the team observed the following:

- All companies could be divided into three categories: small companies interested in testing to gain recognition, medium-sized companies willing to provide trial access to their platforms, and, finally, big players that refused due to program limitations or security concerns.

- Sometimes the same product was displayed at different stands, and team members were routed from one stand to another.

- Few companies were ready to analyze the dataset onsite, and many expressed their surprise at the sight of an external hard drive containing the dataset.

- All companies were surprised to hear about the Moscow Lung Cancer Screening Trial, which includes 11,000 participants and several outpatient clinics connected to the Unified Radiological Information Service.

- AI-based solutions were present throughout the exhibition.

- Some booths were either too crowded to approach or presented computer-aided detection (CAD) for other applications, such as mammography.

The AI Runner projects grew out of a 2017 pilot project for lung cancer screening with ultralow-dose CT. Several questions lay behind the research; the original driver was to find out how image noise affected AI.

"Our scans tend to have higher noise levels compared to conventional low-dose CTs used in the United States and Europe," Gombolevskiy said. "Therefore, we were curious about how image noise levels may affect the AI-based algorithms."

More recently, other areas of interest have surfaced. In 2018, the U.S. Food and Drug Administration (FDA) lowered the requirements for licensing CAD solutions, and the researchers believe that a new wave of market-ready, FDA-approved products is sure to follow. Because the capabilities of such algorithms may be overestimated, misleading both researchers and potential end users, the team wanted to clarify how different systems actually perform. The researchers stressed that they also hope to raise awareness that a product's performance depends on the nuances of its training data.

"We believe that comparative testing with a single database will produce valid results and help developers," Gombolevskiy said. "We want potential buyers to have more information before purchasing an AI-based algorithm for their practice. We will also provide feedback to the developers to increase the accuracy of their solutions."

Project winners will be named, and the team plans to submit a manuscript for publication. The researchers also plan to conduct a third AI Runner project at RSNA 2019.