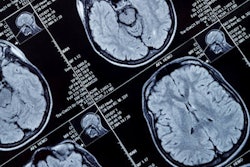

Spanish researchers have built a technique for the early diagnosis of Alzheimer's disease based on the use of a deep-learning algorithm. The method replaces visual inspection of MRI and PET data with comprehensive image analysis of affected brain tissues that exploits their 3D nature, according to the article in the International Journal of Neural Systems.

The team from the University of Malaga and the University of Granada developed multiple brain tissue classification methods based on deep learning and applied them to brain regions on MRI. The technique splits gray matter images from each brain region into 3D patches, which are then used to train neural networks. Validated on a large public dataset, the resulting classification method achieved accuracy of up to 90% and an area under the curve (AUC) of 0.95 for differentiating controls from Alzheimer's patients.

"We used a different approach based on deep-learning methods rather than pure statistical learning techniques," explained Andrés Ortiz, PhD, in an email to AuntMinnieEurope.com. "Instead of using classical statistical methods to compute relevant features from images, we used deep neural networks, which discover relevant information for diagnosis. In other words, the artificial neural system learns what differentiates the different types of images (e.g., cognitively normal and Alzheimer's)."

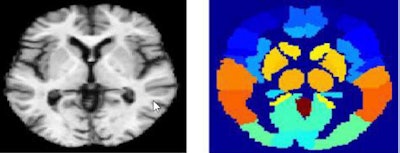

Above, MRI image (left), MRI atlas (right) used for deep learning-based image analysis. Below, PET image (left) and PET atlas (right). Images courtesy of Andrés Ortiz, PhD.

This feature extraction process has proved effective, as the support vector machine (SVM) classifier provides good classification results, he added.

Difficult diagnosis

Despite great progress, diagnosing Alzheimer's disease remains challenging, especially in the early stages, said Ortiz, from the University of Malaga's engineering and communications department. As Alzheimer's advances, he said, brain functions become increasingly affected, and it becomes more difficult to contain the neurodegenerative process. The cause of Alzheimer's remains poorly understood, and the drugs that are available can, at best, only slow the progression of the disease. Nevertheless, early diagnosis is crucial for treating the disease effectively and may help in the development of new drugs.

"Our technique allows us to have a pretrained system that can also be incrementally trained with new images that can aid in the diagnosis process," he wrote.

Advanced imaging systems provide a wealth of information that is difficult to extract manually, yet manual evaluation is the usual way different tissues are assessed on MRI: visual ratings by an observer, combined with other subjective steps.

The deep-learning classification methods are applied to brain regions defined by the automated anatomical labeling (AAL) atlas. Gray matter images are divided into 3D patches according to the AAL regions, and these patches are then used to train deep belief networks (DBNs).
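A minimal sketch of the region-wise patching step, assuming the gray matter map and an AAL label volume are already registered to the same voxel grid, with label 0 as background. Cutting each region's bounding box and zeroing voxels that belong to other regions is an illustrative choice, not necessarily how the authors built their patches; the function name `extract_region_patches` and the toy arrays are hypothetical.

```python
from typing import Dict, List, Tuple

# Volumes are nested lists indexed as volume[x][y][z].
Volume = List[List[List[float]]]
LabelVolume = List[List[List[int]]]


def extract_region_patches(gm: Volume, atlas: LabelVolume) -> Dict[int, Volume]:
    """Split a gray matter volume into one 3D patch per atlas region.

    Each patch is the bounding box of a region's voxels; voxels inside the
    box that belong to another region (or background, label 0) are set to 0.
    """
    # First pass: track the min and max corner of every region's bounding box.
    boxes: Dict[int, Tuple[List[int], List[int]]] = {}
    for x, plane in enumerate(atlas):
        for y, row in enumerate(plane):
            for z, label in enumerate(row):
                if label == 0:  # background
                    continue
                lo, hi = boxes.setdefault(label, ([x, y, z], [x, y, z]))
                for axis, c in enumerate((x, y, z)):
                    lo[axis] = min(lo[axis], c)
                    hi[axis] = max(hi[axis], c)

    # Second pass: copy each bounding box, masking out voxels of other regions.
    patches: Dict[int, Volume] = {}
    for label, (lo, hi) in boxes.items():
        patches[label] = [
            [
                [gm[x][y][z] if atlas[x][y][z] == label else 0.0
                 for z in range(lo[2], hi[2] + 1)]
                for y in range(lo[1], hi[1] + 1)
            ]
            for x in range(lo[0], hi[0] + 1)
        ]
    return patches


# Toy 2x2x2 example with two labeled regions (hypothetical values).
gm_volume = [[[0.1, 0.2], [0.3, 0.4]], [[0.5, 0.6], [0.7, 0.8]]]
aal_labels = [[[1, 1], [0, 2]], [[1, 1], [2, 2]]]
patches = extract_region_patches(gm_volume, aal_labels)
```

Each entry of `patches` could then serve as the training input for that region's own network.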

A deep belief network can be seen as a neural network composed of several hidden layers, with connections between layers but not between the units within each layer, the authors explained. This multilayer architecture attempts to mimic human biology: the human brain is believed to organize information hierarchically, from simple to more abstract representations, along with the relationships between the different layers, Ortiz and colleagues noted.
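The layer structure described above matches the standard construction of a DBN as a stack of restricted Boltzmann machines (RBMs), each trained greedily on the output of the layer below. The sketch uses one-step contrastive divergence (CD-1) with binary units in plain Python; the layer sizes, learning rate, epoch count, toy data, and all names are hypothetical, and the authors' actual configuration (including any unit types suited to continuous gray matter values, or supervised fine-tuning) is not described in the article.

```python
import math
import random
from typing import List

Vector = List[float]


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


class RBM:
    """Restricted Boltzmann machine: every visible unit connects to every
    hidden unit, but units within the same layer are not connected."""

    def __init__(self, n_visible: int, n_hidden: int, rng: random.Random) -> None:
        self.rng = rng
        self.W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b_v = [0.0] * n_visible  # visible biases
        self.b_h = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v: Vector) -> Vector:
        return [sigmoid(self.b_h[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.b_h))]

    def visible_probs(self, h: Vector) -> Vector:
        return [sigmoid(self.b_v[i] + sum(h[j] * self.W[i][j] for j in range(len(h))))
                for i in range(len(self.b_v))]

    def cd1_update(self, v0: Vector, lr: float = 0.1) -> None:
        """One contrastive divergence (CD-1) step on a single training vector."""
        h0 = self.hidden_probs(v0)
        # Sample binary hidden states, reconstruct the input, and re-infer hidden.
        h_sample = [1.0 if self.rng.random() < p else 0.0 for p in h0]
        v1 = self.visible_probs(h_sample)
        h1 = self.hidden_probs(v1)
        for i in range(len(v0)):
            for j in range(len(h0)):
                self.W[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
            self.b_v[i] += lr * (v0[i] - v1[i])
        for j in range(len(h0)):
            self.b_h[j] += lr * (h0[j] - h1[j])


def train_dbn(data: List[Vector], layer_sizes: List[int],
              epochs: int = 20, seed: int = 0) -> List[RBM]:
    """Greedy layer-wise pretraining: each RBM learns from the hidden
    activations of the RBM below it."""
    rng = random.Random(seed)
    layers: List[RBM] = []
    inputs = data
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        rbm = RBM(n_in, n_out, rng)
        for _ in range(epochs):
            for v in inputs:
                rbm.cd1_update(v)
        layers.append(rbm)
        inputs = [rbm.hidden_probs(v) for v in inputs]
    return layers


def dbn_features(layers: List[RBM], v: Vector) -> Vector:
    """Propagate an input up the stack; higher layers are more abstract."""
    for rbm in layers:
        v = rbm.hidden_probs(v)
    return v


# Toy binary "patches" (hypothetical) and a 6-4-2 network.
toy_data = [[1.0, 1.0, 1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]] * 5
dbn = train_dbn(toy_data, [6, 4, 2])
top = dbn_features(dbn, toy_data[0])
```

Training is unsupervised: no diagnostic labels are used, which is what lets the top-layer activations act as automatically learned features.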

Comparing classification

In this study, two deep belief network-based architectures and four different voting schemes were implemented and compared, yielding a powerful classification architecture in which the discriminative features are computed in an unsupervised manner, the team wrote (Int. J. Neural Syst., November 2016, Vol. 26:7, pp. 1650025).

Earlier researchers were unable to train deep architectures properly and, as a result, largely abandoned multilayer neural architectures in favor of support vector machines (SVMs), which use an optimization technique to compute the weight associated with each image feature. Since 2006, however, new training algorithms have made multilayer neural architectures popular again.

In this paper, a set of deep belief networks, one for each brain region, was trained to form an ensemble. This ensemble was then used to compare two alternatives: a voting-based DBN approach and an SVM-based DBN approach.
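The article does not list the four voting schemes, so the sketch below shows two common ways to fuse per-region decisions, simple majority voting and weighted voting, purely as illustrative assumptions. The label encoding, the per-region weights, and the function names are hypothetical; an SVM voter would instead train an SVM on the vector of regional outputs.

```python
from collections import Counter
from typing import Dict, Sequence

CONTROL, ALZHEIMERS = 0, 1  # hypothetical label encoding


def majority_vote(predictions: Sequence[int]) -> int:
    """Each regional classifier casts one vote; ties go to the smaller label."""
    counts = Counter(predictions)
    best = max(counts.values())
    return min(label for label, c in counts.items() if c == best)


def weighted_vote(predictions: Sequence[int], weights: Sequence[float]) -> int:
    """Each vote counts with a per-region weight, for example that region's
    validation accuracy; ties go to the smaller label."""
    totals: Dict[int, float] = {}
    for label, w in zip(predictions, weights):
        totals[label] = totals.get(label, 0.0) + w
    best = max(totals.values())
    return min(label for label, t in totals.items() if t == best)


# Hypothetical outputs of five regional DBN classifiers for one subject.
regional_predictions = [ALZHEIMERS, CONTROL, ALZHEIMERS, ALZHEIMERS, CONTROL]
regional_weights = [0.60, 0.90, 0.55, 0.50, 0.95]
```

Here three of five regions vote Alzheimer's, so the majority vote returns `ALZHEIMERS`, but the two dissenting regions carry more total weight (1.85 versus 1.65), so the weighted vote returns `CONTROL`.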

Part of the BioSIP group that worked on the study. Left to right: Maria García-Tarifa, Andrés Ortiz, Jorge Munilla, Alberto Peinado, Guillermo Cotrina.

"The first consists in the use of an ensemble of DBN classifiers, while the latter is based on the use of DBNs as feature extractors, making use of their capability of representing the information at different abstraction layers," Ortiz et al. wrote.

In all, the authors compared different deep belief network-based alternatives: two using voter methods and two using support vector machine methods (DBN-voter and FEDBN-SVM).

Comparing the four methods used to fuse the decisions of the individual classifiers in the voting-based approach showed that the SVM voter method gave the best results. Overall, however, the best classification outcomes were obtained using deep belief networks as feature extractors (FEDBN-SVM). This method provided higher classification performance, as measured by the area under the curve (AUC), than using discriminative deep belief networks as classifiers.
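To illustrate the feature-extractor idea behind FEDBN-SVM, the sketch below concatenates per-region DBN feature vectors (assumed to be already extracted) into one vector per subject and trains a linear SVM on them. The SVM is trained with Pegasos, a stochastic subgradient method for the regularized hinge loss, swapped in so the example needs only the standard library; the authors' SVM implementation, kernel, and hyperparameters are not given in the article, and all names and toy values are hypothetical.

```python
import random
from typing import List, Sequence

Vector = List[float]


def concat_region_features(region_features: Sequence[Vector]) -> Vector:
    """Join the feature vectors extracted by each regional DBN into one vector."""
    return [x for feats in region_features for x in feats]


def train_linear_svm(X: List[Vector], y: List[int], lam: float = 0.01,
                     epochs: int = 200, seed: int = 0) -> Vector:
    """Linear SVM trained with Pegasos (stochastic subgradient descent on the
    regularized hinge loss). Labels must be -1 or +1. A constant 1.0 feature
    is appended to each input so the last weight acts as a bias."""
    rng = random.Random(seed)
    w = [0.0] * (len(X[0]) + 1)
    t = 0
    for _ in range(epochs):
        order = list(range(len(X)))
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)
            xa = X[i] + [1.0]
            margin = y[i] * sum(wk * xk for wk, xk in zip(w, xa))
            # Regularization shrinks w; a margin violation pulls w toward y*x.
            w = [(1.0 - eta * lam) * wk for wk in w]
            if margin < 1.0:
                w = [wk + eta * y[i] * xk for wk, xk in zip(w, xa)]
    return w


def predict(w: Vector, x: Vector) -> int:
    score = sum(wk * xk for wk, xk in zip(w, x + [1.0]))
    return 1 if score >= 0.0 else -1


# Hypothetical subjects, each with a one-value feature from two regional DBNs.
# +1 = Alzheimer's, -1 = control.
X_train = [
    concat_region_features([[2.0], [1.5]]),
    concat_region_features([[1.5], [2.0]]),
    concat_region_features([[-2.0], [-1.5]]),
    concat_region_features([[-1.5], [-2.0]]),
]
y_train = [1, 1, -1, -1]
svm_weights = train_linear_svm(X_train, y_train)
```

In contrast to voting, the SVM sees all regions' features at once, so it can learn how they combine rather than only counting regional verdicts.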

The classification architecture achieved accuracy as high as 90% and an AUC of 0.95 for distinguishing controls from Alzheimer's disease, accuracy of 84% and an AUC of 0.95 for distinguishing mild cognitive impairment from Alzheimer's disease, and accuracy of 83% and an AUC of 0.95 for distinguishing controls from mild cognitive impairment, the team wrote.
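Accuracy and AUC measure different things, which is why the paired figures above can diverge: accuracy depends on a chosen decision threshold, while AUC summarizes how well the classifier ranks cases across all thresholds. A minimal sketch of both metrics, computing AUC from its pairwise (Mann-Whitney) definition; the labels, scores, and thresholds are made up for illustration.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of subjects whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive scores above a random negative
    (ties count one half); equals the Mann-Whitney U statistic / (n_pos * n_neg)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


# Toy labels (1 = Alzheimer's, 0 = control) and classifier scores.
labels = [0, 0, 1, 1]
scores = [0.10, 0.40, 0.35, 0.80]
preds = [1 if s >= 0.5 else 0 for s in scores]       # threshold 0.5
preds_alt = [1 if s >= 0.38 else 0 for s in scores]  # threshold 0.38
```

With these toy scores the AUC is 0.75 regardless of threshold, while accuracy is 0.75 at a threshold of 0.5 but falls to 0.5 at 0.38.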

Classifying mild cognitive impairment (MCI) is much more difficult than distinguishing controls from Alzheimer's patients, Ortiz noted. "Thus, we are focused on this problem," he said. "In fact, we are developing new methods also based on deep-learning architectures to model the progression of the disease. This aims not only to classify a subject (e.g., normal, Alzheimer's, or MCI), but also to figure out the prognosis."

The method offers important advances over visual inspection, he added.

"The evaluation of the different tissues found on a brain MRI is usually done through visual ratings performed by experts and other subjective steps, which is time-consuming and prone to error," Ortiz wrote.

For example, the human visual system cannot differentiate more than 30 to 35 gray levels, whereas high-resolution MRI can have a 16-bit depth (65,536 gray levels), he wrote. The technique also processes 3D MRI volumes rather than individual slices, thereby taking advantage of the 3D nature of the images.
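The gray-level comparison can be made concrete: a 16-bit image stores 2^16 = 65,536 intensity values, so if a viewer can separate only about 32 gray levels (a value picked from the 30 to 35 range quoted), each perceptible band hides 2,048 distinct stored values. The equal-width banding below is a simplifying assumption, since human brightness perception is not linear; the constant and function names are hypothetical.

```python
BIT_DEPTH = 16
STORED_LEVELS = 2 ** BIT_DEPTH  # 65,536 intensity values in a 16-bit image
PERCEIVED_LEVELS = 32           # assumption: a value within the quoted 30-35 range


def perceived_band(intensity: int) -> int:
    """Map a 16-bit intensity (0..65535) to one of PERCEIVED_LEVELS
    equal-width gray bands (a simplification of real perception)."""
    if not 0 <= intensity < STORED_LEVELS:
        raise ValueError("intensity out of 16-bit range")
    return intensity * PERCEIVED_LEVELS // STORED_LEVELS


# Number of distinct stored intensities that fall into each perceived band.
VALUES_PER_BAND = STORED_LEVELS // PERCEIVED_LEVELS  # 2,048
```

Intensities of 1,000 and 2,000, which an algorithm treats as clearly different, land in the same perceived band (band 0).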

One of the most important sources of information in medical imaging is the relationship between a voxel and its neighbors, Ortiz wrote. Three-dimensional processing allows the exploration of 3D neighborhoods. The vast amount of data contained in MRI images requires advanced techniques to exploit the information they contain, he wrote.
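A slice-by-slice analysis sees at most 8 in-plane neighbors around a pixel, while a 3D analysis can use all 26 voxels that touch a voxel by a face, edge, or corner. The sketch below is a generic illustration rather than the authors' code: it enumerates those offsets and gathers a voxel's neighborhood from a nested-list volume, clipping at the volume boundary. The names and toy volume are hypothetical.

```python
from itertools import product
from typing import List, Tuple


def neighbor_offsets(dims: int) -> List[Tuple[int, ...]]:
    """Offsets to every voxel sharing a face, edge, or corner with the center."""
    return [d for d in product((-1, 0, 1), repeat=dims) if any(d)]


def neighborhood(volume: List[List[List[float]]], x: int, y: int, z: int) -> List[float]:
    """Values of the in-bounds 26-connected neighbors of voxel (x, y, z)."""
    nx, ny, nz = len(volume), len(volume[0]), len(volume[0][0])
    values = []
    for dx, dy, dz in neighbor_offsets(3):
        i, j, k = x + dx, y + dy, z + dz
        if 0 <= i < nx and 0 <= j < ny and 0 <= k < nz:
            values.append(volume[i][j][k])
    return values


# Toy 3x3x3 volume whose voxel values are 0..26.
toy_volume = [[[float(9 * i + 3 * j + k) for k in range(3)] for j in range(3)]
              for i in range(3)]
```

An interior voxel gets all 26 neighbors, while a corner voxel gets only 7, which is why boundary handling matters when 3D patches are cut from a volume.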

"The methods we are currently developing do not use SVM as classifiers," he noted. "We are focused on deep-learning systems, used for modeling, feature extraction, and classification. We are betting on this technique as it has an incredible potential in image processing. Moreover, we are also working to include other biochemical biomarkers -- not only imaging biomarkers -- to improve the diagnosis and prognosis accuracy."